SIMD Architectures and Instructions

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to SIMD Architectures

Today, we're diving into SIMD Architectures. What do you think SIMD stands for?

Single Instruction, Multiple Data?

Exactly! SIMD processors allow a single instruction to simultaneously process multiple data elements. Can anyone explain why this is significant?

It increases speed, especially in tasks involving large datasets!

Perfect! That's right. SIMD architectures excel when handling repetitive operations on large data sets. Now, can you name any applications of SIMD?

Image processing, video encoding, things like that!

Great examples! Let's remember that SIMD is essential in many modern applications, especially those needing rapid computations.

Components of SIMD Architecture

Now let's discuss the components of SIMD architecture. What do you think vector units are?

Are they the hardware parts that execute SIMD instructions?

Correct! Vector units are specialized hardware components for executing vector instructions. Can anyone tell me about vector length?

It's the number of data elements a vector unit can process at one time, right?

Absolutely! The length determines the amount of data processed per cycle, which is crucial for performance. Remember, different systems may support different vector lengths.

SIMD Instructions

Let’s get into SIMD instructions. What are element-wise operations?

Operations like addition and multiplication that apply to each element in a vector.

Exactly! Because one instruction handles every element of the vector, these operations need fewer instructions than a scalar loop. Can anyone explain what gather/scatter operations do?

They help in loading and storing non-consecutive data efficiently, right?

Spot on! Gather/scatter operations provide flexibility in how memory is accessed, enhancing efficiency in complex data manipulations.

Performance Impact of SIMD

Now, let's talk about performance. Why do you think SIMD is beneficial in reducing operational time for large datasets?

Because it processes multiple data elements simultaneously instead of one at a time!

Yes! This parallelism significantly accelerates tasks, especially those that repeat operations. Can someone give me an example where SIMD might be particularly useful?

In scientific simulations, where a lot of similar calculations are needed!

Exactly! SIMD architectures drastically speed up performance in applications requiring extensive calculations.

Introduction & Overview

Quick Overview

Standard

In SIMD architectures, vector units and tailored instructions enable parallel operations on multiple data elements. The capabilities of SIMD hardware, including varying vector lengths and element-wise operations, enhance performance for large datasets across various computational tasks.

Detailed

SIMD Architectures and Instructions

Overview

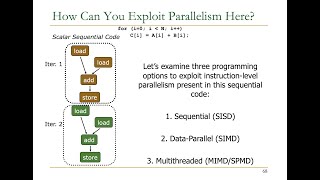

The SIMD (Single Instruction, Multiple Data) architecture is pivotal in enabling high-performance computing by allowing a single instruction to operate on multiple data points simultaneously. This section delves into the core components and functionality of SIMD architectures, underscoring their efficiency and application in various domains.

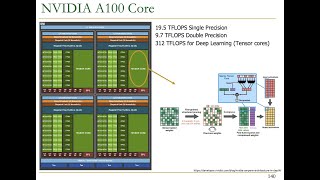

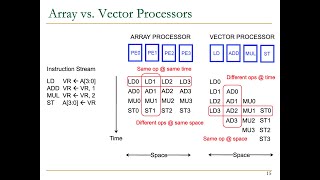

SIMD Hardware Architecture

- Vector Units: These are dedicated hardware components designed to execute vector instructions efficiently, allowing operations on several data elements at once.

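- Vector Length: The width of a vector register, which sets how much data a single instruction can process. Modern SIMD hardware commonly supports 128-bit, 256-bit, or even 512-bit vectors. The number of elements handled per instruction depends on the element size (a 256-bit vector holds eight 32-bit values or four 64-bit values), so the vector length directly affects the degree of parallelism achievable in computations.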
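The relationship between vector length and parallelism can be sketched with a small calculation. This is a minimal illustration in plain Python, not hardware code; the function name `lanes` is hypothetical and chosen only for this example.

```python
def lanes(register_bits: int, element_bits: int) -> int:
    """Return how many elements of a given width fit in one vector register."""
    if register_bits % element_bits != 0:
        raise ValueError("element width must divide the register width")
    return register_bits // element_bits


# Compare the three vector lengths named in the text for two element sizes.
for width in (128, 256, 512):
    print(width, "bits:", lanes(width, 32), "x 32-bit,", lanes(width, 64), "x 64-bit")
```

The same register width therefore gives more parallelism for narrow elements (such as 8-bit pixels) than for wide ones (such as 64-bit floating-point values).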

SIMD Instructions

- Element-wise Operations: These operations apply the same function (e.g., addition, multiplication) across all elements in a vector simultaneously, enhancing throughput.

- Gather/Scatter Operations: These instructions enable flexible memory access. A gather loads non-contiguous elements from memory into a vector register, and a scatter stores the elements of a vector register to non-contiguous memory locations, which is essential for complex data structures.
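An element-wise addition can be modeled as follows. This is a sketch in plain Python that only imitates the behavior of a vector unit; `LANES`, `vector_add`, and `add_arrays` are hypothetical names, and the lane count of four is an assumption (four 32-bit elements in a 128-bit register). Real SIMD code would rely on compiler vectorization or hardware intrinsics.

```python
LANES = 4  # assumed: four 32-bit elements per 128-bit register


def vector_add(a, b):
    """Model one SIMD add: the same operation is applied to every lane."""
    if len(a) != LANES or len(b) != LANES:
        raise ValueError("a vector operation needs exactly LANES elements")
    return [x + y for x, y in zip(a, b)]


def add_arrays(a, b):
    """Add two equal-length arrays, LANES elements per modeled instruction."""
    if len(a) != len(b):
        raise ValueError("arrays must have the same length")
    out = []
    full = len(a) - len(a) % LANES  # part of the array that fills whole vectors
    for i in range(0, full, LANES):
        out.extend(vector_add(a[i:i + LANES], b[i:i + LANES]))
    for i in range(full, len(a)):  # leftover elements handled one at a time
        out.append(a[i] + b[i])
    return out


result = add_arrays([1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60])
```

The leftover loop reflects a practical detail: when the array length is not a multiple of the lane count, the remaining elements need scalar or masked handling.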

SIMD Performance

Due to the parallel processing capabilities, SIMD architectures significantly reduce operational time for large datasets, which is crucial in fields like scientific computing, graphics, and multimedia processing. By utilizing SIMD, applications can achieve substantial performance gains, streamlining computations that involve repetitive tasks.

Audio Book

SIMD Hardware Architecture

Chapter 1 of 3

Chapter Content

● SIMD Hardware Architecture:

○ Vector Units: Dedicated hardware units capable of executing vector instructions that operate on multiple data elements.

○ Vector Length: SIMD hardware may support different vector lengths, such as 128-bit, 256-bit, or 512-bit, determining how much data can be processed per cycle.

Detailed Explanation

SIMD hardware architectures are designed specifically to support the parallel processing of data using vector units. A vector unit applies one operation to several data elements at once, allowing for increased throughput. The vector length is the width of the vector registers in bits; lengths of 128, 256, or 512 bits are common. How many elements are processed simultaneously depends on the element size: a 128-bit vector holds four 32-bit values, while a 512-bit vector holds sixteen. Larger vector lengths allow more data to be processed per instruction, which can lead to significant performance gains, especially for large-scale data tasks.

Examples & Analogies

Think of SIMD hardware architecture like a factory assembly line. A workstation (vector unit) performs the same step on a whole batch of components (data elements) at once. If a workstation can handle two products at once (like a 128-bit vector of 64-bit values), a wider workstation can handle four or eight at a time (like 256-bit or 512-bit vectors). As a result, the factory can produce more products in the same amount of time.

SIMD Instructions

Chapter 2 of 3

Chapter Content

● SIMD Instructions:

○ Element-wise Operations: Operations that are applied to each data element in a vector, such as addition, multiplication, and logical comparisons.

○ Gather/Scatter Operations: Gather loads non-contiguous memory elements into a vector register, and scatter stores vector elements to non-contiguous memory locations, enabling more flexible memory access patterns.
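Element-wise comparisons typically produce a per-lane mask that later instructions can use to choose between values. The sketch below models that idea in plain Python; the mask-and-select pattern is an assumption added for illustration (the text above only names comparisons), and `compare_gt` and `blend` are hypothetical helper names.

```python
def compare_gt(vec, threshold):
    """Element-wise comparison: produce one boolean mask entry per lane."""
    return [x > threshold for x in vec]


def blend(mask, if_true, if_false):
    """Per-lane select: take if_true where the mask is set, else if_false."""
    return [t if m else f for m, t, f in zip(mask, if_true, if_false)]


values = [3, -1, 4, -5]
mask = compare_gt(values, 0)                       # which lanes are positive
clamped = blend(mask, values, [0] * len(values))   # replace the others with 0
```

Because every lane follows the same instruction stream, this kind of masking is a common way to express per-element conditions without branching.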

Detailed Explanation

SIMD instructions are specialized commands designed to optimize operations on data using the SIMD architecture. Element-wise operations perform the same mathematical operation on each element of a vector. For example, if you have two arrays of numbers, a single SIMD instruction can add several pairs of corresponding elements at once, as many as fit in one vector register. Gather/scatter operations allow the processor to load data from, and store data to, non-contiguous memory locations. This means that the data elements do not have to be stored next to each other in memory, making it easier to work with more complex data structures.
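The gather and scatter behavior described above can be modeled with an index vector. This is a minimal sketch in plain Python that treats a list as memory; `gather` and `scatter` are hypothetical helpers written for this example, not calls from any SIMD library.

```python
def gather(memory, indices):
    """Load the elements at the given (possibly non-contiguous) indices."""
    return [memory[i] for i in indices]


def scatter(memory, indices, values):
    """Store each value to the memory location named by its index."""
    for i, v in zip(indices, values):
        memory[i] = v


memory = [10, 11, 12, 13, 14, 15, 16, 17]
indices = [6, 0, 3, 5]              # non-contiguous and not in order

register = gather(memory, indices)  # collect four scattered elements
register = [x * 2 for x in register]  # element-wise operation on the vector
scatter(memory, indices, register)  # write the results back in place
```

Only the four indexed locations change; the rest of `memory` is untouched, which is what makes the pattern useful for sparse or indirectly addressed data.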

Examples & Analogies

Imagine you're baking cookies and have a tray where you can place multiple cookies at once. Element-wise operations are like putting the same amount of dough on each spot of the tray — for instance, if you're adding chocolate chips, you can put them on every cookie at the same time. Gather/scatter operations are akin to taking your cookie dough from different containers that are spread out on the table; you can gather just the right amount from each without needing to line them up perfectly (non-contiguous) before you bake.

SIMD Performance

Chapter 3 of 3

Chapter Content

● SIMD Performance: SIMD achieves high performance by processing multiple data elements in parallel, significantly reducing the time required for operations on large datasets.

Detailed Explanation

The performance benefits of SIMD come from its ability to execute multiple operations in parallel rather than sequentially. This means that when you need to perform the same operation across large datasets, SIMD can do this in significantly less time than scalar processing, which handles one element per instruction. With wider vector registers, each instruction covers more elements, so a loop over N elements needs roughly N divided by the number of lanes vector instructions instead of N scalar ones, leading to improved computational efficiency and speed.
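A rough upper bound on this benefit can be estimated by counting instructions. The sketch below is a simplified model that assumes one vector instruction costs the same as one scalar instruction; `vector_op_count` and `ideal_speedup` are hypothetical names. Measured speedups are usually lower because of memory bandwidth limits, leftover elements, and code that cannot be vectorized.

```python
import math


def vector_op_count(n_elements: int, lanes: int) -> int:
    """Vector instructions needed to cover n_elements, rounding up for the tail."""
    return math.ceil(n_elements / lanes)


def ideal_speedup(n_elements: int, lanes: int) -> float:
    """Scalar instruction count divided by vector instruction count."""
    if n_elements <= 0:
        raise ValueError("n_elements must be positive")
    return n_elements / vector_op_count(n_elements, lanes)


ops = vector_op_count(1000, 8)   # 1000 elements, eight lanes
speedup = ideal_speedup(1000, 8)
```

When the element count is not a multiple of the lane count, the final partly filled vector pulls the ideal speedup slightly below the lane count.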

Examples & Analogies

Consider a team of movers compared to an individual. If you need to move a house full of furniture, a single mover (traditional processing) can only carry one piece at a time, making the process lengthy. However, a whole team of movers (SIMD) can each grab an item and carry it simultaneously, drastically reducing the overall time to move everything. The more movers you have, the quicker the house is empty!

Key Concepts

- Vector Units: Specialized hardware for executing vector instructions.

- Vector Length: Determines how much data can be processed at once.

- Element-wise Operations: Operations applied individually to each data element in a vector.

- Gather/Scatter Operations: Enable flexible access to memory, allowing non-contiguous data access.

Examples & Applications

In multimedia applications, SIMD can process multiple pixels simultaneously for image adjustments.

Scientific simulations can utilize SIMD to perform the same mathematical operations on large arrays of data concurrently.
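The pixel example can be sketched as a brightness adjustment on 8-bit values. This is a model in plain Python rather than real SIMD code; the lane count of sixteen is an assumption (sixteen 8-bit pixels in a 128-bit register), saturating arithmetic is chosen here for illustration, and `saturating_add_u8` and `brighten` are hypothetical names.

```python
LANES = 16  # assumed: sixteen 8-bit pixels per 128-bit register


def saturating_add_u8(pixels, delta):
    """Model one vector add that clamps each lane to the 0..255 range."""
    return [min(255, max(0, p + delta)) for p in pixels]


def brighten(image, delta):
    """Adjust brightness of a flat list of 8-bit pixels, one chunk per step."""
    out = []
    for i in range(0, len(image), LANES):
        # The final chunk may be shorter than LANES; real hardware would
        # need a masked or scalar path for those leftover pixels.
        out.extend(saturating_add_u8(image[i:i + LANES], delta))
    return out


brighter = brighten([0, 100, 250, 255], 10)
```

Clamping matters for image data: without it, a bright pixel such as 250 would wrap around to a dark value when 10 is added in 8-bit arithmetic.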

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

SIMD helps us work with speed, processing data is what we need!

Stories

Imagine a factory that produces cars. Instead of building them one at a time, SIMD allows several cars to be constructed simultaneously, improving efficiency and speed!

Memory Tools

Remember 'SIMPLE' for SIMD: S - Single Instruction, I - Instructions, M - Multiple, P - Processing, L - Large data, E - Efficiency.

Acronyms

Use 'V-FAST' to recall things about vector units - V for Vector, F for Fast, A for Accessing multiple, S for Simultaneous, T for Tasks.

Glossary

- SIMD

Single Instruction, Multiple Data; a parallel computing method that processes multiple data points simultaneously with a single instruction.

- Vector Units

Dedicated hardware components designed to execute vector instructions in SIMD architectures.

- Vector Length

The size of data in bits that can be processed in a single instruction cycle; commonly 128-bit, 256-bit, or 512-bit.

- Element-wise Operations

Operations that are performed on each element of a vector, such as addition, multiplication, and comparison.

- Gather/Scatter Operations

Instructions that load non-contiguous memory elements into vector registers (gather) and store vector elements to non-contiguous memory locations (scatter).
