GPUs for Machine Learning


Interactive Audio Lesson

A student-teacher conversation explaining the topic in a relatable way.

Architectural Advantages of GPUs


Let's explore the architectural advantages of GPUs. Unlike CPUs, which excel in single-threaded performance, GPUs are designed for massive parallel processing. They contain hundreds or thousands of small cores that can execute many threads simultaneously.

Why does that matter for machine learning?

Great question! In machine learning, especially deep learning, we deal with large datasets that require heavy computations. The ability of GPUs to process many pieces of data at once drastically speeds up these computations.

Can you give an example of what kind of tasks they speed up?

Sure! Operations like matrix multiplication and convolution are critical in training neural networks. GPUs can handle these tasks much faster than CPUs due to their parallel nature.

So, the more cores a GPU has, the better it is for machine learning?

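Generally yes, up to a point! More cores let a GPU run more operations simultaneously, which suits the large scale of computation in deep learning. Core count is not the whole story, though: memory bandwidth, clock speed, and specialized matrix units (for example, NVIDIA's Tensor Cores) also matter, and cores from different GPU generations are not directly comparable. As a rule of thumb, think ‘More Cores = More Speed’ for ML tasks!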

Got it! More cores help with speed. What other advantages do GPUs have?

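Another advantage is flexibility. GPUs are programmable, so the same hardware can run many kinds of machine learning operations, from the dense layers of a simple network to the convolutions in image models. They do their best work when many threads apply the same instruction to different pieces of data; code in which neighboring threads take very different paths runs less efficiently. Remember: GPUs = Versatility in ML! Let's summarize: GPUs excel with many cores, speeding up operations like matrix multiplication that deep learning depends on.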
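The ‘More Cores = More Speed’ rule of thumb has a limit. A standard way to reason about it, not taken from this lesson, is Amdahl's law: if a fraction p of the work can run in parallel on N cores, the ideal speedup is 1 / ((1 - p) + p / N). The sketch below assumes perfectly even work division and ignores memory bandwidth and data-transfer costs, so its numbers are upper bounds rather than predictions for any real GPU.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup under Amdahl's law.

    parallel_fraction: share of the work (0..1) that can run in parallel.
    cores: number of processors working on the parallel part.
    Assumes perfect load balancing and no communication or memory overhead.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workload that is 99% parallelizable.
    for n in (1, 16, 1024, 10_000):
        print(n, round(amdahl_speedup(0.99, n), 1))
```

With p = 0.99 the speedup keeps growing as cores are added but can never exceed 1 / (1 - p) = 100, which is why the parallel fraction of a workload matters as much as the core count.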

General-Purpose GPUs (GPGPUs)


Now, let’s talk about General-Purpose GPUs, or GPGPUs. These are GPUs that have been adapted to serve a broader range of applications beyond just rendering graphics. Can anyone name a technology that allows this?

Is it CUDA from NVIDIA?

Yes, precisely! CUDA allows developers to run parallel computations on GPUs for tasks like scientific simulations and, of course, machine learning. It provides a programming interface for this.

What about AMD? Do they have something similar?

Absolutely! AMD has ROCm (originally short for Radeon Open Compute). Both platforms let developers harness GPUs for general-purpose computation, and both are pivotal for machine learning tasks.

So, it's not just gaming and graphics anymore?

Correct! Today, GPUs are essential in AI, machine learning, and other computationally heavy tasks. Remember: GPGPUs have gone beyond ‘graphics’ to ‘general-purpose’!

That’s an important shift in technology!

Indeed! The flexibility and power of GPGPUs have helped drive the rapid growth of machine learning capabilities. Summing it up: GPGPUs, programmed through platforms like CUDA and ROCm, are powerful, versatile tools for ML.
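In practice, most machine learning code reaches CUDA or ROCm through a framework rather than calling them directly. The sketch below assumes PyTorch as that framework (it is not mentioned in this lesson). `torch.cuda.is_available()` is part of PyTorch's API, and PyTorch's ROCm builds expose AMD GPUs through the same `torch.cuda` interface. If PyTorch is not installed, the function simply falls back to the CPU.

```python
def pick_device() -> str:
    """Return "cuda" if PyTorch can see a GPU, otherwise "cpu".

    Assumes PyTorch is the framework in use. ROCm builds of PyTorch report
    AMD GPUs through torch.cuda, so "cuda" here covers both vendors.
    """
    try:
        import torch  # optional dependency; the CPU fallback works without it
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("Running on:", pick_device())
```

A PyTorch model or tensor can then be moved to the chosen device with its `.to(device)` method.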

Applications in Deep Learning


Let’s dive into how GPUs specifically accelerate deep learning. When training neural networks, the speed and efficiency of computation become crucial. How do you think GPUs help in this process?

They can process many calculations at once, right?

Exactly! Matrix multiplication is vital in neural network training, and each element of the output matrix is an independent dot product of one row and one column. Because none of those dot products depends on another, they are a perfect fit for GPUs.

And what about convolution? We often hear about it in deep learning.

Indeed! Convolutions in CNNs parallelize just as well, because each output value is computed from its own small patch of the input. That enables the fast image processing behind AI applications such as object detection and image classification.

Interesting! So, using GPUs can drastically reduce training times for models?

Precisely! Training that might take weeks on a CPU could be reduced to days or even hours. Think of a multi-car lift in a busy parking garage: instead of parking cars one at a time, it moves many cars into their spaces at once!

That’s a cool analogy! So, is that the main reason GPUs are preferred for deep learning?

Yes! The ability of GPUs to handle vast amounts of calculations quickly is why they are preferred for deep learning applications. Summarizing this session: GPUs enhance deep learning through fast parallel processing, dramatically reducing training times for neural networks.
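To see why convolution parallelizes so well, here is a minimal pure-Python 2D convolution with no padding and stride 1. Like most deep learning libraries, it actually computes cross-correlation (the kernel is not flipped). Each output cell reads only its own window of the input and writes only its own location, so a GPU could assign one thread per output cell. This is an illustrative sketch, not an optimized implementation.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """2D cross-correlation with no padding and stride 1, as in CNN layers."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1
    if oh < 1 or ow < 1:
        raise ValueError("kernel must not be larger than the image")

    def output_cell(r: int, c: int) -> float:
        # Reads only the input window at (r, c); independent of every other cell.
        return sum(
            image[r + i][c + j] * kernel[i][j]
            for i in range(kh)
            for j in range(kw)
        )

    # On a GPU, each (r, c) pair could be computed by a separate thread.
    return [[output_cell(r, c) for c in range(ow)] for r in range(oh)]


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    box = [[1, 1], [1, 1]]  # sums each 2 x 2 window
    print(conv2d_valid(img, box))  # [[12, 16], [24, 28]]
```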

Introduction & Overview


GPUs, originally designed for rendering graphics, have become increasingly important in machine learning due to their capability to accelerate various complex operations. Their structure allows them to handle parallel computations effectively, making them ideal for tasks such as matrix multiplication and convolution operations commonly used in deep learning.

Detailed

GPUs for Machine Learning

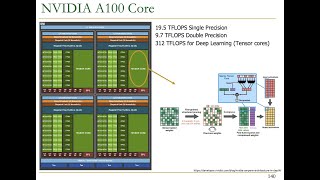

GPUs (Graphics Processing Units) have revolutionized the field of machine learning, particularly in deep learning applications where large datasets and complex computations are commonplace. The architecture of GPUs, with thousands of small cores focused on parallel processing, makes them ideally suited for executing intensive tasks like matrix multiplications and convolution operations.

Key Points Covered:

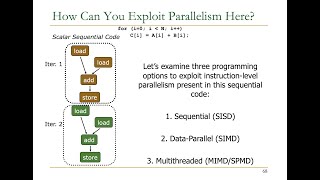

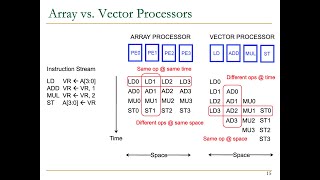

- Architectural Advantages: Unlike CPUs designed for general-purpose tasks and single-threaded performance, GPUs are optimized for massive parallelism. They can execute thousands of threads simultaneously, which is essential for training deep learning models that require processing multiple data points at once.

- General-Purpose GPUs (GPGPUs): Modern GPUs transcend their original purpose of graphics rendering. Technologies like NVIDIA's CUDA and AMD's ROCm allow them to serve in diverse applications, including deep learning and scientific simulations.

- Application in Deep Learning: Tasks such as training neural networks are accelerated through the efficient parallel computation capabilities of GPUs, dramatically reducing training times and enabling the handling of larger datasets.

In summary, the significance of GPUs in machine learning lies in their ability to perform vast amounts of calculations simultaneously, making them indispensable in current AI development.
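The point about processing many data points at once can be made concrete with a little arithmetic. Take a fully connected layer (chosen here for illustration) that maps a batch of B inputs of size d_in to outputs of size d_out. Its forward pass is a (B × d_in) by (d_in × d_out) matrix multiplication: B · d_out independent dot products, each made of d_in multiply-adds. The helper below only counts that work, and the layer sizes in the example are hypothetical.

```python
def dense_layer_work(batch: int, d_in: int, d_out: int) -> dict:
    """Count the work in one forward pass of a fully connected layer (bias ignored)."""
    independent_outputs = batch * d_out  # one dot product per output value
    macs_per_output = d_in               # multiply-adds inside each dot product
    total_macs = independent_outputs * macs_per_output
    return {
        "independent_outputs": independent_outputs,
        "macs_per_output": macs_per_output,
        "total_macs": total_macs,
    }


if __name__ == "__main__":
    # Hypothetical sizes: a batch of 64 vectors, 1024 inputs, 4096 outputs.
    print(dense_layer_work(64, 1024, 4096))
```

For these sizes the layer produces 262,144 dot products that could all run at the same time, about 268 million multiply-adds in total, which is the kind of workload that keeps thousands of GPU threads busy.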


Audio Book


Overview of GPU Usage in Machine Learning

Chapter 1 of 2


Chapter Content

GPUs are commonly used for accelerating machine learning models, particularly deep learning, where tasks such as matrix multiplication and convolution operations can be parallelized.

Detailed Explanation

This section highlights the importance of GPUs in machine learning, especially in deep learning. GPUs are designed for high levels of parallel processing, so they can perform many operations at once. Matrix multiplication and convolution are central to machine learning because they process large amounts of data, and they benefit greatly from parallel execution. Running these tasks on GPUs therefore completes them more efficiently than running them on traditional CPUs.
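GPU programming models such as CUDA commonly split a large array across many threads. One widely used pattern, the grid-stride loop, has thread t process elements t, t + T, t + 2T, and so on, where T is the total number of threads. The Python sketch below only simulates that assignment sequentially to show how the work is divided; it does not run anything in parallel, and the ReLU operation is an arbitrary choice for illustration.

```python
from typing import List


def indices_for_thread(thread_id: int, num_threads: int, n: int) -> List[int]:
    """Grid-stride assignment: the element indices one thread would handle."""
    return list(range(thread_id, n, num_threads))


def relu_simulated_threads(data: List[float], num_threads: int) -> List[float]:
    """Apply ReLU element-wise, visiting simulated threads one after another.

    On a GPU the outer loop would not exist: all threads run concurrently.
    That is safe because each element's result depends only on that element.
    """
    out = [0.0] * len(data)
    for t in range(num_threads):
        for i in indices_for_thread(t, num_threads, len(data)):
            out[i] = max(0.0, data[i])
    return out


if __name__ == "__main__":
    x = [-2.0, -1.0, 0.5, 3.0, -0.1, 4.0, 2.5]
    print(indices_for_thread(1, 3, len(x)))  # [1, 4]
    print(relu_simulated_threads(x, 3))      # [0.0, 0.0, 0.5, 3.0, 0.0, 4.0, 2.5]
```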

Examples & Analogies

Imagine a bakery where each pastry chef can make only one pastry at a time. With a single chef (like a single processor core working alone), filling a big order takes a long time. Bring in many more chefs (like the many cores of a GPU), and each one can work on a different pastry simultaneously, so the order is finished in far less time. This is similar to how GPUs help speed up machine learning processes!

Parallelization of Matrix Operations

Chapter 2 of 2


Chapter Content

Tasks such as matrix multiplication and convolution operations can be parallelized.

Detailed Explanation

Matrix multiplication and convolution are foundational operations in many machine learning models, especially neural networks. With large matrices, these operations involve a great many calculations: with the standard algorithm, multiplying two n × n matrices takes n³ multiply-add operations, so two 1000 × 1000 matrices need one billion of them. GPUs excel at performing many of these calculations at the same time, because their architecture provides many cores that together execute thousands of threads in parallel. This capability can greatly reduce training time for machine learning models.
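The sketch below shows the decomposition a GPU exploits: every element C[i][j] of the product is its own task, a dot product of row i of A and column j of B that shares no intermediate results with any other element. It uses Python's `concurrent.futures.ThreadPoolExecutor` purely to make that independence explicit. In standard CPython the global interpreter lock prevents these threads from actually speeding up the arithmetic, so this illustrates the structure of the problem, not GPU performance.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]


def matmul_parallel(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Multiply a (n x k) by b (k x m), submitting each output element as its own task."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")

    def cell(i: int, j: int) -> float:
        # C[i][j] = dot product of row i of a and column j of b.
        # It only reads a and b and writes nothing shared, so cells are independent.
        return sum(a[i][p] * b[p][j] for p in range(k))

    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [[pool.submit(cell, i, j) for j in range(m)] for i in range(n)]
        return [[f.result() for f in row] for row in futures]


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(matmul_parallel(a, b))  # [[19, 22], [43, 50]]
```

For two n × n matrices this submits n² independent tasks; a GPU library would instead map such output elements (or tiles of them) onto its many hardware threads.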

Examples & Analogies

Think of matrix multiplication like a giant puzzle where each piece represents a number. To solve the puzzle (multiply the matrices), instead of one person working on it alone, you have many people each working on different sections of the puzzle at the same time. This collaborative effort leads to much faster completion of the puzzle, just like GPUs speed up matrix operations in machine learning.

Key Concepts

- GPUs: Specialized processors for parallel computations.

- GPUs in ML: Accelerate tasks like matrix multiplication and convolution.

- GPGPUs: GPUs used beyond graphics for general-purpose tasks.

- CUDA: A programming model for general-purpose GPU computing.

Examples & Applications

Training a convolutional neural network (CNN) on a dataset like MNIST is much faster with a GPU due to its ability to perform numerous calculations in parallel.

Using GPUs for training language models in natural language processing can significantly reduce training time from weeks to days.

Memory Aids


Rhymes

When neural nets are quite profound, GPUs help spread the workload round.

Stories

Imagine a busy kitchen. The CPU is like one chef managing a single dish, while the GPU is a team of chefs each handling different ingredients. Together, they prepare a feast, but with GPUs, the feast is ready in record time.

Memory Tools

Remember the acronym 'CUDA': Compute Unified Device Architecture, NVIDIA's platform for general-purpose GPU computing.

Acronyms

GPGPU = General Purpose, Great Performance under GPU utilization!


Glossary

- GPU

Graphics Processing Unit, a specialized processor designed for rapidly rendering images and performing complex calculations in parallel.

- GPGPU

General-Purpose Graphics Processing Unit, a GPU used for applications beyond graphics, including scientific computing and machine learning.

- CUDA

Compute Unified Device Architecture, a parallel computing platform and programming model created by NVIDIA for general-purpose computing on GPUs.

- Matrix Multiplication

A mathematical operation that produces a matrix from two matrices, where each entry is the dot product of a row of the first matrix and a column of the second; widely used in machine learning algorithms.

- Convolution

A mathematical operation that combines two functions to produce a third function, used extensively in image processing and neural networks.
