Graphics Processing Units (GPUs)

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to GPU Architecture

Today we'll explore the architecture of Graphics Processing Units, or GPUs. These are specialized hardware accelerators designed to perform high-speed operations on large datasets. They consist of many small processing cores that execute tasks in parallel.

How do these cores differ from the ones in a CPU?

Great question! Unlike CPUs, which focus on high single-threaded performance, GPUs are built for parallelism and can run many threads at once. This is crucial for operations like graphics rendering, where many pixels can be processed simultaneously.

So, does that mean GPUs can handle larger datasets more efficiently?

Exactly! GPUs maximize throughput by processing blocks of data in parallel, making them incredibly efficient for applications such as scientific simulations.

To remember this architecture, think 'Many Cores, High Parallelism' — it encapsulates their design intent.

I like that! It’s easy to remember!

Let's summarize: GPU architecture emphasizes many small cores working in parallel, enhancing efficiency with large datasets.

General-Purpose GPUs (GPGPUs)

Now we’ll talk about General-Purpose GPUs, or GPGPUs. These are modern GPUs capable of handling workloads beyond graphics. Can anyone guess some applications?

Like machine learning?

Absolutely! GPGPUs are widely used in machine learning, artificial intelligence, and scientific computing. For instance, platforms like CUDA let developers run complex algorithms in parallel on the GPU.

So, is CUDA only for NVIDIA GPUs?

Yes, CUDA is specifically designed for NVIDIA's GPUs. It's pivotal for developers who want to run parallel applications effectively on that hardware. Remember what the name stands for: 'Compute Unified Device Architecture.'

That’s a catchy phrase!

In summary, GPGPUs allow the use of GPU architecture in a vast array of computational tasks beyond graphics.

GPU vs. CPU

Let’s compare GPUs and CPUs. Can anyone highlight a primary difference?

CPUs focus on single-threaded performance, whereas GPUs handle many threads simultaneously.

Exactly! This massive parallelism of GPUs is perfect for tasks requiring simultaneous processing. Think of it as swift multitasking!

So GPUs are better for tasks like gaming and simulations?

Yes! They execute simple operations on large datasets effectively, such as matrix and vector calculations. Just remember: 'GPU for Graphics and Gigabytes'—it’s not just about visuals.

Got it! It’s all about handling complexity in data.

Let's wrap up: GPUs excel in processing many data points at once, vastly improving efficiency for parallel tasks over CPUs.

Applications of GPUs in Machine Learning

Now, we will focus on how GPUs accelerate machine learning. Why do we think they are so pivotal in this area?

Because they can process data in parallel, right?

Correct! Operations like matrix multiplication, which forms the backbone of neural networks, can be split into many independent calculations that a GPU performs simultaneously.

Could you give an example of this?

Certainly! During the training of a deep learning model, huge datasets are fed into the network, where large matrix operations are performed. GPUs handle these efficiently, which shortens training time dramatically. Remember: 'Fast Learning with Parallel Processing.'

Sounds like a game changer!

So in conclusion, GPUs greatly enhance machine learning tasks through their inherent parallel processing ability.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

This section discusses GPUs as essential components in modern computing, outlining their architecture, capabilities for general-purpose computing, and their comparison with CPUs. It highlights the importance of GPUs in accelerating tasks like machine learning, where their massive parallelism is leveraged for efficient computation.

Detailed

Graphics Processing Units (GPUs)

Graphics Processing Units (GPUs) are specialized hardware accelerators distinguished by their ability to execute a multitude of tasks simultaneously, making them indispensable for applications that require extensive parallel processing, such as graphics rendering, scientific simulations, and machine learning.

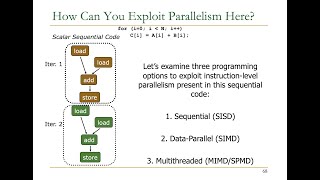

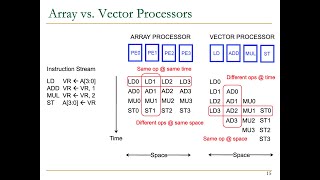

GPU Architecture

The architecture of GPUs is characterized by having hundreds to thousands of small processing cores. These cores are optimized for SIMD (Single Instruction, Multiple Data) operations which allow them to process large datasets efficiently by executing the same instruction across numerous data elements in parallel.

General-Purpose GPUs (GPGPUs)

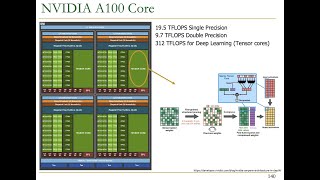

Modern GPUs, referred to as General-Purpose GPUs (GPGPUs), support versatile parallel workloads extending beyond traditional graphics tasks. Platforms like NVIDIA's CUDA and AMD's ROCm have enabled GPUs to perform computations for various applications including deep learning and artificial intelligence.

Comparison: GPU vs. CPU

Unlike CPUs, which are designed for strong single-threaded performance, GPUs excel at parallel processing and can execute thousands of threads simultaneously. This massive parallelism lets GPUs handle tasks that apply simple operations to large datasets, such as the matrix computations in deep learning.

CUDA

CUDA (Compute Unified Device Architecture) is NVIDIA's platform and programming model that lets programmers use GPUs to accelerate computational tasks, primarily from languages such as C, C++, and Fortran.

GPUs in Machine Learning

GPUs play a crucial role in machine learning, especially in training deep neural networks, where operations such as matrix multiplication can be parallelized to enhance performance significantly.

Audio Book

Overview of GPUs

Chapter 1 of 6

Chapter Content

GPUs are specialized hardware accelerators designed to handle large-scale parallel computations, making them ideal for tasks such as graphics rendering, scientific simulations, and machine learning.

Detailed Explanation

GPUs, or Graphics Processing Units, are designed to perform many calculations simultaneously. Unlike CPUs (Central Processing Units), which are optimized to run a small number of tasks very quickly, GPUs can run thousands of threads at once. This makes them extremely efficient for operations that process large amounts of data concurrently, such as generating images or running complex simulations in scientific research.

Examples & Analogies

Think of a CPU like a single chef in a restaurant who can cook one meal at a time, while a GPU is like a bustling kitchen team, where many chefs work together to prepare multiple meals at the same time. This teamwork allows for faster service and the ability to handle many customers (or tasks) at once.

GPU Architecture

Chapter 2 of 6

Chapter Content

GPUs consist of hundreds or thousands of small processing cores, each capable of executing instructions in parallel. These cores are optimized for SIMD-style operations, enabling them to process large datasets efficiently.

Detailed Explanation

The architecture of a GPU includes a large number of small cores designed to work in parallel. Rather than each core following its own independent instruction stream, groups of cores execute the same instruction at the same time, each on a different data element. This SIMD (Single Instruction, Multiple Data) style of operation means that one instruction is applied to many pieces of data simultaneously, greatly increasing efficiency for tasks that can take advantage of parallelism.
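The SIMD idea, one instruction applied to many data elements, can be sketched in plain Python. This is only a conceptual illustration: the names `simd_apply` and `brighten` are hypothetical, and the list comprehension stands in for what real hardware would do in lockstep across its lanes.

```python
from typing import Callable, List


def simd_apply(instruction: Callable[[int], int], lanes: List[int]) -> List[int]:
    """Apply ONE instruction to every data lane (conceptual SIMD step)."""
    # Real SIMD hardware advances all lanes together; this loop is a
    # sequential stand-in that keeps the "same instruction, different
    # data" structure visible.
    return [instruction(value) for value in lanes]


def brighten(pixel: int) -> int:
    """The single instruction: add a fixed brightness offset."""
    return pixel + 5


# Hypothetical pixel intensities used as the data elements.
pixels = [10, 20, 30, 40]
brightened = simd_apply(brighten, pixels)
print(brightened)  # [15, 25, 35, 45]
```

The point of the sketch is that `brighten` never needs to know about any other pixel, which is what makes the work safe to run on many lanes at once.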

Examples & Analogies

Imagine a factory where every worker is assigned the same task, like assembling parts on a production line. If there are hundreds of workers (GPU cores) all working on the same assembly task, products can be completed much faster than if a single worker (CPU core) was doing it all alone.

General-Purpose GPUs (GPGPUs)

Chapter 3 of 6

Chapter Content

Modern GPUs, programmed through platforms such as NVIDIA's CUDA and AMD's ROCm, have evolved into powerful general-purpose processors capable of running parallel workloads for a variety of applications beyond graphics, including deep learning, artificial intelligence, and scientific simulations.

Detailed Explanation

General-Purpose GPUs (GPGPUs) extend the functionality of standard GPUs beyond just graphics processing. They can now handle a wider array of computational tasks in fields like machine learning and scientific research. For example, frameworks like NVIDIA's CUDA allow developers to write programs that perform computations in parallel, utilizing the power of the GPU for various applications that require intensive processing.

Examples & Analogies

Think of a party planner who is known for beautiful decorations and catering (graphics rendering) but who can apply the same organizational skills to other complex problems, such as party logistics or budgeting (GPGPU workloads).

GPU vs. CPU

Chapter 4 of 6

Chapter Content

CPUs are designed for single-threaded performance and general-purpose computation, whereas GPUs are designed for parallelism and can execute thousands of threads simultaneously.

Detailed Explanation

The main difference between CPUs and GPUs lies in their design and capabilities. CPUs are optimized for tasks that require high single-thread performance, which suits them to running operating systems and complex software. In contrast, GPUs excel at parallel processing: they execute many threads at once and handle tasks that can be divided into smaller, simultaneous operations. This makes GPUs significantly more efficient for specific high-demand tasks such as graphics rendering or machine learning.

Examples & Analogies

Imagine a school where the principal (CPU) focuses on managing the overall administration effectively but can oversee only a few students at a time. Meanwhile, a group of teaching assistants (GPU) can each handle a number of students in various subjects at the same time, helping ensure everyone learns efficiently. The CPU runs the school, while the GPU enhances the educational experience by tackling many students' needs at once.

CUDA (Compute Unified Device Architecture)

Chapter 5 of 6

Chapter Content

CUDA is a parallel computing platform and programming model developed by NVIDIA that allows developers to harness the power of GPUs for general-purpose computing tasks. It provides a programming interface for writing GPU-accelerated applications using C, C++, and Fortran.

Detailed Explanation

CUDA is an innovation by NVIDIA that allows programmers to develop software that utilizes the processing power of GPUs for a variety of tasks, not limited to just graphics rendering. Using languages like C, C++, and Fortran, developers can write code that leverages the massive parallel processing capabilities of GPUs, making it possible to tackle complex problems efficiently, such as in data analysis or machine learning applications.
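To give a feel for the programming model, here is a rough Python emulation of how a CUDA-style kernel is written: one small function runs once per thread, and each thread works out which data element it owns from its block and thread indices. This is not CUDA code and does not run on a GPU. The `launch` helper and `vector_add_kernel` are hypothetical names, and the nested loops are a sequential stand-in for threads that a GPU would run concurrently.

```python
def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """Body executed by every (block, thread) pair."""
    # Same shape as the common CUDA idiom:
    #   i = blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    # Guard: the last block may contain threads past the end of the data.
    if i < len(out):
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for a kernel launch over grid_dim blocks."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


a = [1, 2, 3, 4, 5]
b = [10, 20, 30, 40, 50]
out = [0] * len(a)

block_dim = 4  # threads per block (illustrative value)
grid_dim = (len(a) + block_dim - 1) // block_dim  # ceiling division: 2 blocks

launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)  # [11, 22, 33, 44, 55]
```

Two blocks of four threads give eight threads for five elements, so the bounds check is what keeps the three spare threads from writing out of range.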

Examples & Analogies

Think of CUDA as a set of special tools given to a carpenter (developer); these tools are specifically designed to allow the carpenter to build structures much faster using a large crew (GPU cores). Instead of using just a hammer (CPU), the carpenter can now use a complete construction kit to handle multiple construction tasks at once efficiently.

GPUs for Machine Learning

Chapter 6 of 6

Chapter Content

GPUs are commonly used for accelerating machine learning models, particularly deep learning, where tasks such as matrix multiplication and convolution operations can be parallelized.

Detailed Explanation

In machine learning, especially deep learning, GPUs significantly speed up the training and inference processes. This is because many operations, like matrix multiplications and convolutions that are common in neural network calculations, can be executed in parallel on a GPU. This parallel capability drastically reduces the time needed to train machine learning models, allowing researchers and engineers to iterate faster and improve their algorithms.
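Matrix multiplication parallelizes well because every output element depends only on one row of the first matrix and one column of the second. The sketch below makes that independence explicit by computing each cell as a separate task. It uses Python's standard `concurrent.futures` thread pool purely to show the structure; Python threads will not deliver GPU-like speedups, and `output_cell` and `matmul_parallel` are hypothetical names chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def output_cell(A, B, i, j):
    """Dot product of row i of A and column j of B."""
    return sum(A[i][k] * B[k][j] for k in range(len(B)))


def matmul_parallel(A, B, max_workers=4):
    """Compute A @ B with one independent task per output cell."""
    rows, cols = len(A), len(B[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so values line up with cells.
        values = list(pool.map(lambda ij: output_cell(A, B, *ij), cells))
    # Reshape the flat result list back into rows.
    return [values[r * cols:(r + 1) * cols] for r in range(rows)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = matmul_parallel(A, B)
print(C)  # [[19, 22], [43, 50]]
```

No task reads a value that another task writes, so the cells can be computed in any order or all at once, which is the property a GPU exploits.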

Examples & Analogies

Consider a chef training a team of kitchen helpers to prepare a large meal. When the chef uses a few helpers (CPU), they can prepare one dish at a time, taking a long time to finish. However, if the chef brings in many helpers (GPUs), they can prepare numerous dishes all at once, making the overall cooking process much faster, similar to how GPUs speed up the training of deep learning models.

Key Concepts

- GPU Architecture: Characterized by many small cores optimized for parallel processing.

- GPGPUs: Modern GPUs capable of performing a variety of tasks beyond just graphics.

- CUDA: NVIDIA's platform for general-purpose programming on GPUs.

- Massive Parallelism: Key distinguishing feature of GPUs compared to CPUs.

Examples & Applications

Using GPUs to train deep neural networks in machine learning, where matrix multiplications are done in parallel.

Graphics rendering in video games where multiple pixels are processed simultaneously.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

GPUs handle blocks of many, helping data move in a frenzy.

Stories

Imagine a factory with many workers (GPU cores) processing products (data) simultaneously versus just a few highly skilled workers doing everything (CPU). For large batches of identical work, the factory with many workers finishes sooner.

Memory Tools

For GPGPU, think 'General Purpose GPU,' expanding roles beyond just graphics.

Acronyms

CUDA = Compute Unified Device Architecture: C for Compute, U for Unified, D for Device, A for Architecture. Remember it as 'Unified GPU Power.'

Glossary

- GPU

A specialized hardware accelerator that can perform many calculations simultaneously, mainly used for rendering graphics and accelerating computations in various applications.

- GPGPU

General-Purpose Graphics Processing Unit; a GPU that can handle non-graphic workloads.

- CUDA

Compute Unified Device Architecture; a parallel computing platform and programming model developed by NVIDIA for general-purpose computing on GPUs.

- SIMD

Single Instruction, Multiple Data; a parallel computing method in which a single instruction is applied to multiple data elements simultaneously.

- Throughput

The amount of data processed by a system in a given amount of time.
