CUDA (Compute Unified Device Architecture)

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to CUDA

Today, we're diving into CUDA, or Compute Unified Device Architecture. It's a platform by NVIDIA that allows programmers to harness the power of GPUs for parallel computing tasks. Can anyone tell me why CUDA might be beneficial for developers?

Maybe because GPUs can do many calculations at once?

Exactly! This ability to perform many operations simultaneously is what makes CUDA powerful. It lets developers write code that can significantly speed up applications, especially in machine learning and scientific computation.

So, do you need to learn a new language for CUDA?

Good question! CUDA allows you to use C, C++, and Fortran—languages many developers are already familiar with. This makes it accessible to more programmers.

Is it only for graphics tasks?

Great clarification! Although GPUs started out as graphics hardware, CUDA opens them up to a wide range of applications, particularly deep learning, where large data sets are common. Remember, CUDA is essentially about maximizing performance by leveraging GPU capabilities.

Can CUDA be used for anything else besides machine learning?

Absolutely! CUDA is also used in scientific simulations, biomedical research, and even in financial modeling. Its versatility is key to its success.

So, to summarize, CUDA is a platform that enhances GPU computing capabilities, using familiar programming languages to allow developers to explore parallel computing across several fields.

How CUDA Works

Let's delve into how CUDA actually works. When using CUDA, how do you think tasks are divided between CPU and GPU?

I think the CPU does the important calculations, and the GPU does all the repetitive stuff.

That's nearly spot on! The CPU typically handles sequential tasks—those that can’t be parallelized. Meanwhile, the GPU takes on parallelizable tasks, allowing it to perform many operations concurrently.

What kind of tasks does CUDA excel at specifically?

Good point! CUDA excels at tasks like matrix calculations, image processing, and simulations—anything involving large data sets with repeated operations is ideal.

How does a developer implement CUDA in their code?

Developers write GPU kernels in CUDA C or C++. A kernel is a function that runs on the GPU and is launched from code running on the host CPU. Managing memory and execution efficiently is key to maximizing performance.
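As a rough illustration of what such a kernel and its launch from the host can look like, here is a minimal sketch. It assumes an NVIDIA GPU and the CUDA toolkit (compiled with `nvcc`); the names `vectorAdd` and `addOnGpu` are made up for this example, and unified memory (`cudaMallocManaged`) is used only to keep the sketch short.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Kernel: runs on the GPU. Each thread adds one pair of elements.
__global__ void vectorAdd(const float* a, const float* b, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) {                                    // the last block may be only partly used
        out[i] = a[i] + b[i];
    }
}

// Host helper (hypothetical name): launches the kernel and waits for it to finish.
cudaError_t addOnGpu(const float* a, const float* b, float* out, int n) {
    const int threadsPerBlock = 256;
    const int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up
    vectorAdd<<<blocks, threadsPerBlock>>>(a, b, out, n);
    cudaError_t err = cudaGetLastError();  // reports launch errors
    if (err != cudaSuccess) return err;
    return cudaDeviceSynchronize();        // waits and reports execution errors
}

int main() {
    const int n = 1 << 20;
    float *a = nullptr, *b = nullptr, *out = nullptr;

    // Unified memory can be accessed from both the CPU and the GPU.
    if (cudaMallocManaged(&a, n * sizeof(float)) != cudaSuccess ||
        cudaMallocManaged(&b, n * sizeof(float)) != cudaSuccess ||
        cudaMallocManaged(&out, n * sizeof(float)) != cudaSuccess) {
        std::printf("allocation failed\n");
        return 1;
    }
    for (int i = 0; i < n; ++i) {
        a[i] = 1.0f;
        b[i] = 2.0f;
    }

    cudaError_t err = addOnGpu(a, b, out, n);
    if (err != cudaSuccess) {
        std::printf("CUDA error: %s\n", cudaGetErrorString(err));
    } else {
        std::printf("out[0] = %f, out[n-1] = %f\n", out[0], out[n - 1]);
    }

    cudaFree(a);
    cudaFree(b);
    cudaFree(out);
    return err == cudaSuccess ? 0 : 1;
}
```

The `<<<blocks, threadsPerBlock>>>` syntax is how the host tells the GPU how many threads to start; 256 threads per block is a common starting point rather than a required value.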

To recap: CUDA divides work between the CPU and the GPU, sending parallelizable computations to the GPU, where they benefit most.

Applications of CUDA

Finally, let's talk about the applications of CUDA. Can someone name a field where CUDA is heavily utilized?

How about in gaming for graphics rendering?

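Gaming is a related area, although games usually render through graphics APIs such as Direct3D or Vulkan; CUDA tends to handle supporting computation such as physics simulation. CUDA also shines in deep learning tasks, especially in training neural networks. Can anyone provide an example?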

TensorFlow and PyTorch both use CUDA to speed up training, right?

Absolutely! These frameworks rely on CUDA to handle intensive computations more efficiently. Any other industries?

Healthcare, for simulations or analysis of medical data?

Exactly! It’s also utilized in fields like finance for risk assessments and simulations. The versatility speaks to its importance in tech today.

In conclusion, CUDA’s efficiency in various domains—from gaming to healthcare—highlights its role as a transformative tool in modern computing scenarios.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

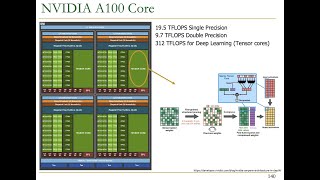

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA, enabling developers to harness GPU processing capabilities for complex computations. It facilitates the development of C, C++, and Fortran applications that can leverage the massively parallel architecture of modern GPUs.

Detailed

CUDA (Compute Unified Device Architecture)

CUDA is a revolutionary parallel computing platform and programming model created by NVIDIA, specifically designed to accelerate computing tasks using the vast resources of Graphics Processing Units (GPUs). By allowing developers to write applications using familiar programming languages such as C, C++, and Fortran, CUDA simplifies the utilization of the GPU's powerful capabilities for general-purpose computing.

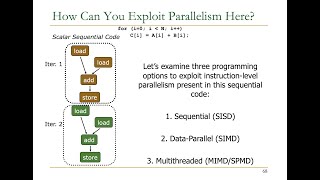

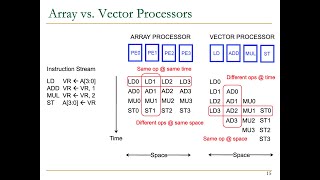

CUDA introduces a programming model where the CPU (Central Processing Unit) handles sequential, control-heavy tasks while offloading data-parallel computations to the GPU. This division allows developers to achieve superior performance in various applications, particularly in fields like machine learning, deep learning, scientific computations, and graphics rendering. The parallel architecture of GPUs, with its thousands of cores optimized for parallel execution, is adept at handling tasks that involve large datasets and repetitive computations.

In summary, CUDA plays a crucial role in the landscape of modern computing, as it empowers developers to unlock the full potential of GPUs and deliver high-performance applications across a multitude of domains.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Overview of CUDA

Chapter 1 of 3

Chapter Content

CUDA is a parallel computing platform and programming model developed by NVIDIA that allows developers to harness the power of GPUs for general-purpose computing tasks.

Detailed Explanation

CUDA stands for Compute Unified Device Architecture. It is a framework created by NVIDIA that enables programmers to use the power of NVIDIA GPUs to solve complex computational problems. Through CUDA, developers can write applications that exploit the massive parallel processing capabilities of GPUs, allowing for speed improvements in various software tasks, especially in graphics processing and scientific computing.

Examples & Analogies

Think of CUDA like a general contractor for a construction project. Just as a contractor organizes teams of builders to work on different parts of a house simultaneously, CUDA organizes threads to perform many calculations at once on a GPU. This way, the overall project is completed much faster than if everything had to be done one step at a time.
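To make the analogy a little more concrete, the sketch below treats each CUDA thread block as a "team" and records which team handled each cell of a small 2D grid. It is only an illustration under assumptions: the names `labelTeams` and `labelOnGpu`, the 8x8 grid, and the 4x4 block shape are all chosen for this example, and it needs an NVIDIA GPU plus the CUDA toolkit to run.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Each thread handles one cell and writes the ID of the block ("team") it belongs to.
__global__ void labelTeams(int* teamOf, int width, int height) {
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    if (col < width && row < height) {
        teamOf[row * width + col] = blockIdx.y * gridDim.x + blockIdx.x;
    }
}

// Host helper (hypothetical name): covers the grid with 4x4 thread blocks.
cudaError_t labelOnGpu(int* teamOf, int width, int height) {
    dim3 block(4, 4);  // 16 threads per team
    dim3 grid((width + block.x - 1) / block.x,
              (height + block.y - 1) / block.y);  // enough teams to cover every cell
    labelTeams<<<grid, block>>>(teamOf, width, height);
    cudaError_t err = cudaGetLastError();
    if (err != cudaSuccess) return err;
    return cudaDeviceSynchronize();
}

int main() {
    const int width = 8, height = 8;
    int* teamOf = nullptr;
    if (cudaMallocManaged(&teamOf, width * height * sizeof(int)) != cudaSuccess) {
        std::printf("allocation failed\n");
        return 1;
    }

    cudaError_t err = labelOnGpu(teamOf, width, height);
    if (err != cudaSuccess) {
        std::printf("CUDA error: %s\n", cudaGetErrorString(err));
        cudaFree(teamOf);
        return 1;
    }

    // Print the map of which team worked on which cell.
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            std::printf("%d ", teamOf[row * width + col]);
        }
        std::printf("\n");
    }
    cudaFree(teamOf);
    return 0;
}
```

All four teams work on their own quarter of the grid at the same time, which is the "different parts of the house" idea from the analogy.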

Programming Interface

Chapter 2 of 3

Chapter Content

CUDA provides a programming interface for writing GPU-accelerated applications using C, C++, and Fortran.

Detailed Explanation

CUDA allows developers to write programs for GPUs using familiar programming languages such as C and C++. By providing specific APIs (Application Programming Interfaces), CUDA makes it easier to implement parallel algorithms and manage memory efficiently on the GPU. This is particularly important, as writing for GPUs differs significantly from writing for CPUs due to the parallel nature of GPU architecture.
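The memory-management side of those APIs can be sketched as follows: allocate GPU memory, copy data to it, run a kernel, and copy the result back. This is a minimal example under assumptions, not a definitive pattern; `scale` and `scaleOnGpu` are hypothetical names, while `cudaMalloc`, `cudaMemcpy`, and `cudaFree` are CUDA runtime API calls.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Kernel: each thread multiplies one element by the given factor.
__global__ void scale(float* data, float factor, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

// Hypothetical helper: scales a host vector on the GPU; returns false on any CUDA error.
bool scaleOnGpu(std::vector<float>& host, float factor) {
    if (host.empty()) return true;  // nothing to do, and a zero-block launch is invalid
    const int n = static_cast<int>(host.size());
    const size_t bytes = host.size() * sizeof(float);

    float* dev = nullptr;
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return false;  // GPU allocation

    // Host -> device copy.
    bool ok = cudaMemcpy(dev, host.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        const int threads = 256;
        const int blocks = (n + threads - 1) / threads;
        scale<<<blocks, threads>>>(dev, factor, n);
        ok = cudaGetLastError() == cudaSuccess;
    }
    // Device -> host copy; this call waits for the kernel above to finish first.
    if (ok) {
        ok = cudaMemcpy(host.data(), dev, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }
    cudaFree(dev);  // always release the GPU allocation
    return ok;
}

int main() {
    std::vector<float> values = {1.0f, 2.0f, 3.0f, 4.0f};
    if (!scaleOnGpu(values, 2.0f)) {
        std::printf("CUDA call failed\n");
        return 1;
    }
    for (float v : values) std::printf("%f\n", v);
    return 0;
}
```

Explicit copies like these are one of the main ways GPU code differs from CPU code: the GPU has its own memory, so data usually has to be moved there before a kernel can work on it.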

Examples & Analogies

Imagine trying to apply a recipe that requires multiple steps, like baking a cake. If you have a friend for each task—one for mixing, one for baking, and one for decorating—you can finish the cake much faster. CUDA allows programmers to distribute tasks across multiple threads, akin to having several friends helping out in the kitchen, each focusing on their job at the same time.

General-Purpose Use of GPUs

Chapter 3 of 3

Chapter Content

Modern GPUs, programmed through platforms such as NVIDIA's CUDA and AMD's ROCm, have evolved into powerful general-purpose processors capable of running parallel workloads for a variety of applications beyond graphics, including deep learning, artificial intelligence, and scientific simulations.

Detailed Explanation

Traditionally, GPUs were primarily used for rendering graphics in video games and other visual applications. However, with frameworks like CUDA, they have been adapted for general-purpose computing. This means that GPUs can now handle a wide array of computational tasks that were once the domain of CPUs, such as handling complex mathematical computations in AI and machine learning, simulations in physics, and processing large datasets efficiently.

Examples & Analogies

Consider a Swiss Army knife that can perform many functions: it has a blade, screwdriver, scissors, and more. While each tool has its primary function, they can all be utilized for various tasks due to their design. Similarly, GPUs have evolved into versatile processors capable of tackling multiple computational challenges, not just graphics.

Key Concepts

- CUDA: NVIDIA's platform for parallel computing leveraging GPU capabilities.

- GPU: Hardware designed for rapid computation and graphics rendering.

- Kernel: The function executed on the GPU to perform parallel processing.

- Host: The CPU that orchestrates actions and data transfer with the GPU.

- Parallelism: The ability to perform numerous calculations concurrently.

Examples & Applications

Using CUDA in deep learning frameworks like TensorFlow to accelerate neural network training.

Implementing high-performance simulations in weather forecasting applications using CUDA.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

CUDA in the computing space, makes processes move at a rapid pace.

Stories

Imagine a busy chef (CPU) delegating tasks to multiple kitchen helpers (GPU), each efficiently chopping vegetables (data processing) simultaneously.

Memory Tools

C = Compute, U = Unified, D = Device, A = Architecture: together they spell out Compute Unified Device Architecture.

Acronyms

CUDA

Computations Under Diverse Architecture (a mnemonic only; the official expansion is Compute Unified Device Architecture).

Glossary

- CUDA

Compute Unified Device Architecture, a parallel computing platform and programming model developed by NVIDIA.

- GPU

Graphics Processing Unit, a specialized processor designed to accelerate rendering and computation.

- Kernel

A function in CUDA that runs on the GPU, performing parallel computations.

- Parallel Computing

A type of computation where many calculations are carried out simultaneously.

- Host

The main CPU that coordinates tasks and manages memory in CUDA.
