Next-Generation SIMD Extensions


Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Next-Generation SIMD


Today we're going to discuss the future of SIMD, specifically the next-generation SIMD extensions. Let's start by understanding what SIMD is. Who can tell me how SIMD enhances computational efficiency?

SIMD allows a single instruction to process multiple pieces of data at once, which makes it faster for tasks that require lots of repetitive data operations.

Exactly! SIMD enables parallel processing, where the same operation is applied to multiple data elements simultaneously. Now, who can name a specific example of where SIMD is commonly used?

It's often used in graphics rendering and scientific simulations!

Great points! Now, with the emergence of applications like AI, SIMD needs to evolve. Let's move on to what next-generation SIMD extensions are.

AVX-512 and Its Advantages


One prominent example of next-generation SIMD extensions is AVX-512. Can anyone explain what AVX-512 stands for and its significance?

AVX-512 stands for Advanced Vector Extensions 512, which means it uses 512-bit wide vector registers for processing data.

Correct! This wider register allows for greater processing capabilities at once. Who can think of why width is important in this context?

Wider registers mean we can handle more data per instruction. A 512-bit register holds sixteen 32-bit floats, twice as many as a 256-bit AVX2 register, which speeds up tasks significantly, especially those involving large datasets.

Right! The advantages of AVX-512 are particularly noticeable in data-heavy fields. Let's look at its implications for machine learning next.

Machine Learning and SIMD


Machine learning is heavily reliant on SIMD for processing massive amounts of data efficiently. What tasks in machine learning do you think benefit most from SIMD features?

Tasks like training neural networks take advantage of SIMD for parallel processing of matrix multiplications.

Exactly! By exploiting SIMD, we can significantly speed up both training and inference phases in machine learning. Could anyone elaborate on how this contrasts with traditional processing?

Traditional scalar processing would be slower, since each instruction handles one data element at a time rather than several simultaneously.

Great observation! This parallelism is critical in achieving faster performance in AI applications. Let's wrap up with the future potential of GPUs incorporating quantum elements.

Future of GPUs and SIMD


As we look toward the future, there's speculation about GPUs potentially incorporating quantum computing elements. How do you think this might change our computational models?

If GPUs used quantum elements, they could solve certain problems much faster than classical approaches, which can't handle them efficiently.

That's a significant insight! Quantum computing could redefine workloads by making practical some computations that are intractable today. In terms of SIMD, it might allow even more complex tasks to be handled simultaneously.

So, the efficiency we see with SIMD today could increase even more with future advances?

Precisely! The potential integration of quantum computing is an exciting prospect for the future of SIMD and overall computational capabilities.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.


Standard

The future of SIMD includes advanced instruction sets such as AVX-512, which provide wider vector registers and more complex operations. These advancements are driven by the increasing demands of AI and machine learning workloads, as well as the potential incorporation of quantum computing elements in future GPUs.

Detailed

Next-Generation SIMD Extensions

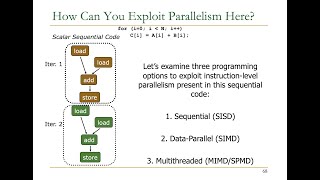

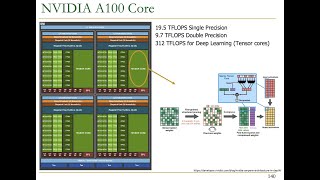

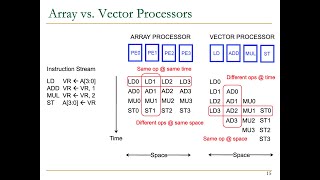

The landscape of SIMD (Single Instruction, Multiple Data) is continuously evolving to cater to the rising demands of complex computational tasks. Next-generation SIMD extensions, like Intel's AVX-512, improve performance through wider vector registers and additional advanced operations. These enhancements are particularly significant for data-intensive fields such as artificial intelligence (AI) and scientific simulations, where efficient data processing is crucial.
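One example of the "additional advanced operations" mentioned above is per-lane masking: AVX-512 provides dedicated mask registers, so an instruction can update only selected lanes of a vector. The sketch below imitates that behaviour in plain Python rather than calling real intrinsics; the function names `masked_add` and `tail_mask` are hypothetical and chosen only for illustration.

```python
def masked_add(dst, a, b, mask):
    """Lane-wise a + b where bit k of mask is 1; otherwise lane k keeps dst[k].

    This mimics a merge-masked vector add; the lists stand in for registers.
    """
    return [a[k] + b[k] if (mask >> k) & 1 else dst[k] for k in range(len(dst))]


def tail_mask(remaining, lanes):
    """Mask with the lowest min(remaining, lanes) bits set.

    Useful when fewer than `lanes` elements are left at the end of an array.
    """
    return (1 << min(remaining, lanes)) - 1


# Only lanes 0 and 2 are updated; lanes 1 and 3 keep their old values.
result = masked_add([0, 0, 0, 0], [1, 2, 3, 4], [10, 20, 30, 40], 0b0101)
print(result)
```

Masking of this kind lets a vectorised loop process a final, partially filled vector without a separate scalar clean-up loop.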

The surge in machine learning applications is propelling innovations that augment SIMD capabilities, allowing GPUs to accelerate training and inference processes significantly. Furthermore, as quantum computing technology advances, it is anticipated that future GPUs may integrate quantum processing techniques, potentially transforming how we tackle complex computational workloads that classical processors struggle to manage.


Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to Next-Generation SIMD Extensions

Chapter 1 of 3


Chapter Content

New SIMD instruction sets like AVX-512 in Intel CPUs provide wider vector registers and more advanced operations to improve performance for data-intensive tasks such as AI and scientific simulations.

Detailed Explanation

Next-generation SIMD extensions, like AVX-512, enhance the capabilities of CPUs by introducing larger vector registers. A vector register is a small, fast storage location inside the CPU that holds multiple data elements at once, allowing the CPU to perform one operation on several data points simultaneously. When we say that AVX-512 provides wider vector registers, we mean that its registers are 512 bits wide, compared with 256 bits for AVX2 and 128 bits for SSE, so a single instruction can process more elements. This is especially beneficial for demanding tasks that require handling large amounts of data, such as artificial intelligence (AI) applications and scientific computations, which often involve complex calculations on vast datasets.
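To make "wider vector registers" concrete, the following sketch computes how many elements fit in one register and how many vector operations a single pass over an array would need. It is simple arithmetic, not a benchmark; the helper names `lanes` and `vector_ops_needed` are hypothetical, and the model ignores memory bandwidth, clock-speed effects, and instruction latency.

```python
import math

# Register widths in bits for three x86 SIMD generations.
REGISTER_BITS = {"SSE": 128, "AVX2": 256, "AVX-512": 512}


def lanes(register_bits, element_bits):
    """Number of elements of the given size that fit in one vector register."""
    return register_bits // element_bits


def vector_ops_needed(n_elements, register_bits, element_bits):
    """Vector operations needed to touch n_elements once.

    The last operation may be only partially filled, hence the ceiling.
    """
    return math.ceil(n_elements / lanes(register_bits, element_bits))


for name, bits in REGISTER_BITS.items():
    print(name, "holds", lanes(bits, 32), "float32 values;",
          vector_ops_needed(1000, bits, 32), "operations for 1000 floats")
```

Under this simplified model, one pass over 1000 32-bit floats takes 250 operations with 128-bit registers but only 63 with 512-bit registers. Real speedups are usually smaller than the ratio of register widths suggests.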

Examples & Analogies

Imagine a restaurant kitchen where a chef works on a small cutting board that fits only one ingredient at a time. This is like an older SIMD extension with narrow vector registers. Now give the chef a much larger cutting board, so that one pass of the knife chops several ingredients at once. This is the advantage of wider vector registers in next-generation SIMD extensions: the CPU handles more data per operation, which shortens cooking times during a rush, just as it speeds up processing in AI or scientific simulations.

Impact of Next-Generation Extensions on Performance

Chapter 2 of 3


Chapter Content

These innovations are expected to drive further advancements in SIMD and vector processing capabilities, particularly for accelerating deep learning training and inference.

Detailed Explanation

The introduction of new SIMD instruction sets like AVX-512 changes not only how data is processed but also the overall performance of many computational tasks. One of the most notable areas of improvement is deep learning, a field that relies on processing massive datasets to train models. Because each instruction handles more data, these extensions can shorten training times and speed up inference, so models learn from data more rapidly and apply what they have learned more efficiently in real-time situations.
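Much of the work in deep learning reduces to multiply-accumulate operations such as dot products. The sketch below shows how a vectorised dot product is typically organised: one partial sum per lane, a final reduction across lanes, and a scalar loop for leftover elements. It simulates 16 float32 lanes in plain Python and is an illustration only; `dot_simd_style` is a hypothetical name, not a library function.

```python
LANES = 16  # float32 values in one 512-bit register


def dot_simd_style(a, b, lanes=LANES):
    """Dot product organised the way a vectorised loop would be."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    acc = [0.0] * lanes                   # one partial sum per lane
    full = len(a) - len(a) % lanes        # elements covered by whole vectors
    for i in range(0, full, lanes):
        # One simulated multiply-accumulate: every lane updates together.
        acc = [acc[k] + a[i + k] * b[i + k] for k in range(lanes)]
    total = sum(acc)                      # horizontal reduction across lanes
    for i in range(full, len(a)):         # scalar tail for leftover elements
        total += a[i] * b[i]
    return total


a = [float(i) for i in range(40)]
b = [2.0] * 40
print(dot_simd_style(a, b))
```

Because the lanes accumulate separately, the additions happen in a different order than in a simple left-to-right loop, so floating-point results can differ slightly from a scalar implementation.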

Examples & Analogies

Think of a school where students are learning through group projects. In classrooms without advanced teaching methods, projects take longer to complete because teams can only work on small parts at a time. However, if the classrooms are equipped with new tools (like wider vector registers in SIMD), the students can collaborate more effectively, combining their efforts to finish projects much faster. This analogy illustrates how next-generation SIMD extensions can accelerate deep learning processes by enabling faster collaboration in data handling and processing.

Future Prospects of SIMD and GPU Technology

Chapter 3 of 3


Chapter Content

The growing use of GPUs for machine learning and AI workloads is expected to drive further innovations in SIMD and vector processing capabilities.

Detailed Explanation

As the demand for machine learning and AI capabilities increases, so does the reliance on GPUs, which use a SIMD-style execution model (NVIDIA calls its variant SIMT, Single Instruction, Multiple Threads). GPUs are optimized for performing the same instruction across many data points simultaneously, which fits AI workloads that often require handling large matrices. This means that as more advanced AI algorithms are developed, the technology behind SIMD will also evolve to improve processing speed and efficiency. Consequently, we can anticipate more powerful, feature-rich SIMD extensions that better support the next generation of AI applications.

Examples & Analogies

Imagine a factory that produces complex toys. As toy designs become more intricate, the factory needs to upgrade its machinery to keep up with production demands. Similarly, as the field of machine learning and AI evolves and grows, the technology behind SIMD and GPUs must also advance to meet the requirements of newer, more complex algorithms, ensuring that they can process vast amounts of data quickly and efficiently, just as the factory upgrades its machines to continue producing toys effectively.

Key Concepts

- SIMD: A technique in which a single instruction operates on multiple data elements simultaneously.

- AVX-512: An x86 SIMD instruction set extension with 512-bit vector registers, enhancing processing capabilities.

- Machine Learning: A field that benefits significantly from advances in SIMD extensions.

- Quantum Computing: A future technology that may integrate with SIMD architectures to solve complex computational challenges.

Examples & Applications

Using AVX-512 to accelerate matrix multiplications in deep neural networks.

Exploiting SIMD for enhanced performance in video rendering tasks.
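As an illustration of the video rendering case, the sketch below brightens 8-bit pixel values with a saturating add, an operation SIMD instruction sets commonly provide for image data. A 512-bit register holds 64 such values, so each loop iteration stands in for one vector operation. This is a plain Python simulation under stated assumptions: `brighten` is a hypothetical name, and `amount` is assumed to be non-negative.

```python
LANES_U8 = 64  # 512 bits / 8 bits per pixel channel


def brighten(pixels, amount, lanes=LANES_U8):
    """Add `amount` to every 8-bit value, clamping at 255 (saturating add)."""
    out = []
    for i in range(0, len(pixels), lanes):
        chunk = pixels[i:i + lanes]                       # simulated vector load
        out.extend(min(p + amount, 255) for p in chunk)   # simulated vector op
    return out


print(brighten([0, 100, 250, 255], 10))
```

Clamping matters here: with ordinary wrap-around arithmetic, a bright pixel such as 250 would overflow to a dark value instead of staying at the maximum.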

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

SIMD’s speed, a processing need, handling data in great deed.

Stories

Imagine a school where each student (data point) learns the same lesson (instruction) at the same time using different materials; this is SIMD at work.

Memory Tools

S.O.C. - SIMD Over Clock: Remember the essential three elements of SIMD - Single instruction, Over many data, Concurrently.

Acronyms

A.V.E. - Advanced Vector Execution: a simple way to remember how next-generation SIMD works, executing advanced instructions with wider vectors.


Glossary

- SIMD

Single Instruction, Multiple Data; a parallel computing model that processes multiple data elements with a single instruction.

- AVX-512

Advanced Vector Extensions 512; a set of x86 SIMD instructions, introduced by Intel, that enhance performance through 512-bit vector registers.

- Machine Learning

A subset of artificial intelligence focused on the development of algorithms that allow computers to learn from data.

- Quantum Computing

A type of computation that leverages principles of quantum mechanics, such as superposition and entanglement, and may solve certain classes of problems much faster than classical computers.

- Deep Learning

A class of machine learning algorithms that use multiple layers to extract increasingly abstract features from data.
