General-Purpose GPUs (GPGPUs)

Interactive Audio Lesson

Introduction to GPGPUs

Today, we will delve into General-Purpose GPUs, or GPGPUs. Can anyone tell me what makes a GPGPU different from a traditional GPU?

Are they used for more than just rendering graphics?

Exactly! GPGPUs have evolved to perform a wide range of computational tasks alongside graphics, such as scientific simulations and machine learning. They use their parallel processing capabilities to handle large volumes of data efficiently.

So they have multiple cores that can work at the same time?

That's right! GPUs have hundreds or even thousands of small processing cores designed for parallel execution. This architecture is well suited to running thousands of threads at the same time, whereas a CPU has far fewer cores and can run only a limited number of threads at once.

What are some examples of tasks that use GPGPUs?

Great question! Deep learning for AI, image processing, and simulations in physics and chemistry can all leverage GPGPUs. For workloads that parallelize well, GPGPUs can finish these computations much faster than a CPU.

Is there a programming model specifically for GPGPUs?

Yes! NVIDIA has CUDA, while AMD has ROCm. These frameworks allow developers to write code that can efficiently utilize the processing capabilities of GPGPUs.

To summarize, GPGPUs are powerful tools not just for graphics rendering but also for a wide range of computational tasks, primarily due to their parallel processing architecture.

Comparison of CPU and GPU

How do you think GPUs differ from CPUs in terms of performance?

I think CPUs are better for single-threaded tasks?

That's correct! CPUs are optimized for tasks requiring high single-threaded performance, while GPUs excel at parallelism. They can perform simple operations across large datasets simultaneously.

So for tasks like video rendering or AI training, a GPU is a better choice?

Precisely! In these cases, the GPU can drastically reduce computation time due to its ability to handle many operations at once.

Do you have an example of when a CPU might be preferable to use?

Certainly! The operating system itself, and applications dominated by complex decision-making with lots of branching and little parallelism, are better served by a CPU's architecture.

In summary, the key difference is that CPUs focus on managing tasks with complex branching and high sequential performance, while GPUs shine in scenarios that demand processing large parallel workloads.

Applications of GPGPUs

Let’s talk about some applications of GPGPUs. Can anyone think of fields where GPGPUs are used?

I know they are used in AI for training models.

That's a major application! GPGPUs help with the heavy computations involved in training deep learning models, handling the matrix multiplications efficiently.

What about in scientific research?

Good point! Fields such as genomics and physics often use GPGPUs to run simulations and analyze large data sets quickly.

Do GPGPUs have an impact on real-time graphics?

Absolutely! The same hardware that handles general-purpose computation still drives real-time rendering in games and other graphical applications, enhancing visual experiences.

What’s the takeaway for developers?

The takeaway is that knowing how to leverage GPGPUs, and recognizing which parts of an application can run in parallel, can significantly improve application performance across many fields.

In summary, GPGPUs have broad applications across various fields, including machine learning, scientific research, and real-time graphics.

Introduction & Overview

Standard

This section discusses the evolution of GPUs into General-Purpose Graphics Processing Units (GPGPUs), highlighting their capabilities in handling a wide range of computational tasks beyond graphics, including deep learning and scientific simulations. It contrasts the architecture of GPUs with traditional CPUs, emphasizing the GPU's ability to execute thousands of threads simultaneously.

Detailed

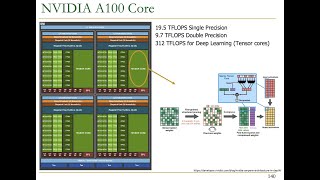

General-Purpose Graphics Processing Units (GPGPUs) represent a significant evolution in GPU technology, allowing these powerful processors to undertake a variety of computational tasks traditionally reserved for the CPU. GPGPUs leverage their massively parallel architectures, consisting of hundreds or thousands of small processing cores, to efficiently handle high-volume data processing.

GPGPUs can execute thousands of threads simultaneously, making them suitable for tasks such as deep learning, artificial intelligence, and scientific simulations. NVIDIA’s CUDA and AMD’s ROCm are two notable platforms that enable this functionality, providing programming models that help developers optimize their applications for GPU acceleration. The section contrasts CPUs and GPUs, underscoring how GPUs excel at parallel tasks that apply simple operations to large datasets. The adoption of GPGPUs is accelerating progress in computational fields, particularly in machine learning and other data-intensive areas.
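
To make the CUDA-style programming model concrete, here is a minimal sketch in plain Python (no GPU required). It assumes a one-dimensional launch and borrows CUDA's `blockIdx`/`blockDim`/`threadIdx` naming only in comments; `saxpy_kernel` and `launch` are hypothetical helpers written for illustration, not part of any real library. Each simulated thread computes exactly one output element from its global index, which is the pattern simple CUDA kernels commonly use.

```python
import math


def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    # Global index, mirroring CUDA's blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may contain surplus threads
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    # On a GPU every (block, thread) pair would run concurrently;
    # this CPU simulation simply visits them one after another.
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(b, block_dim, t, *args)


n = 1000
x = [float(k) for k in range(n)]
y = [1.0] * n
out = [0.0] * n
block_dim = 256
grid_dim = math.ceil(n / block_dim)  # round up to whole blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out[:3], out[-1])
```

On real hardware the loops in `launch` disappear because the threads run in parallel. The `if i < len(x)` guard matters because the grid is rounded up to whole blocks (4 × 256 = 1024 threads for 1000 elements).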

Audio Book

Introduction to GPGPUs

Chapter 1 of 4

Chapter Content

Modern GPUs, programmed through platforms such as NVIDIA's CUDA and AMD's ROCm, have evolved into powerful general-purpose processors capable of running parallel workloads for a variety of applications beyond graphics, including deep learning, artificial intelligence, and scientific simulations.

Detailed Explanation

General-Purpose GPUs (GPGPUs) are graphics processing units whose role has expanded from rendering graphics to performing computation for a wide array of tasks. This means they can handle the heavy calculations needed in fields such as deep learning, where they process large amounts of data quickly. Traditional GPUs were used mainly for graphics; GPGPUs apply the same parallel processing capabilities to general problems in areas like AI and scientific simulation.

Examples & Analogies

Think of a GPGPU like a Swiss Army knife: just as its many tools let it do far more than cut, a GPGPU's parallel hardware lets it do far more than render graphics, making it versatile across many scenarios.

Hardware and Architecture

Chapter 2 of 4

Chapter Content

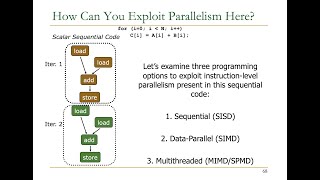

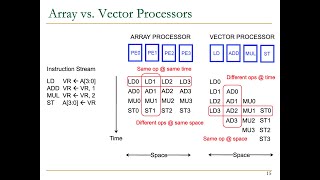

GPUs consist of hundreds or thousands of small processing cores that execute instructions in parallel with one another. These cores are optimized for SIMD-style operations, enabling them to process large datasets efficiently.

Detailed Explanation

The architecture of GPGPUs is designed to support thousands of small cores that can execute many instructions simultaneously. This parallel processing capability is critical for efficiently handling large datasets. By optimizing for SIMD (Single Instruction, Multiple Data) operations, these cores can perform the same operation on multiple data points at once, which is ideal for tasks like matrix calculations and image processing where many similar operations are conducted on large volumes of data.
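
The SIMD idea can be sketched in Python by counting "vector steps", where one instruction covers a whole group of lanes at once. This is a conceptual model only: the lane width of 8 and the helper `simd_apply` are assumptions for illustration, and Python still loops over the lanes internally where real hardware would process them in a single instruction.

```python
LANES = 8  # assumed vector width for illustration; real hardware widths vary


def simd_apply(op, data, lanes=LANES):
    """Apply `op` to `lanes` elements per step and count the steps taken."""
    result = []
    steps = 0
    for start in range(0, len(data), lanes):
        chunk = data[start:start + lanes]
        # Conceptually ONE instruction applied to every lane in the chunk
        result.extend(op(v) for v in chunk)
        steps += 1
    return result, steps


def brighten(p):
    # Same simple operation for every pixel, clamped to the 8-bit maximum
    return min(p * 2, 255)


pixels = list(range(20))
brightened, steps = simd_apply(brighten, pixels)
print(steps)
```

Twenty pixels would take 20 scalar steps but only 3 vector steps of width 8, which is where the SIMD advantage for image processing and matrix work comes from.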

Examples & Analogies

Imagine a factory assembly line where each worker performs the same task on a different product at the same time. Rather than one worker finishing the task on every product in turn, many workers share the batch and complete it much faster. GPGPUs process data in the same way.

Comparison with CPUs

Chapter 3 of 4

Chapter Content

CPUs are designed for single-threaded performance and general-purpose computation, whereas GPUs are designed for parallelism and can execute thousands of threads simultaneously.
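
One standard way to quantify why single-threaded CPU performance still matters is Amdahl's law, a well-known rule of thumb added here rather than taken from the lesson: if a fraction p of a program can run in parallel on N workers, the overall speedup is at most 1 / ((1 - p) + p / N). The sketch below evaluates it; `amdahl_speedup` is a hypothetical helper name, and the formula ignores real-world costs such as copying data between CPU and GPU memory.

```python
def amdahl_speedup(p, n):
    """Amdahl's law: best-case speedup when a fraction p of the work
    is split across n parallel workers and the rest stays serial."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("p must be in [0, 1] and n must be >= 1")
    serial = 1.0 - p       # part that cannot be parallelized
    parallel = p / n       # part shared evenly across n workers
    return 1.0 / (serial + parallel)


for p in (0.5, 0.95, 0.999):
    print(f"p={p}: speedup on 1000 workers = {amdahl_speedup(p, 1000):.1f}")
```

Even with 1,000 parallel workers, a program that is 95% parallel speeds up by only about 19.6×, because the serial 5% dominates. That serial part is exactly where a CPU's fast single-threaded performance pays off.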

Detailed Explanation

CPUs (Central Processing Units) are optimized to run a small number of threads very quickly and are good at managing complex instructions and control flow. GPGPUs, in contrast, are designed to execute many simple operations simultaneously, so they perform parallel computations exceptionally well. This architecture makes GPUs substantially more efficient than CPUs for workloads that parallelize well, such as large-scale data processing and machine learning algorithms.
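
The point about control flow can be illustrated with a simplified model of branch divergence. GPU threads are scheduled in groups that execute in lock step (NVIDIA calls a group of 32 threads a warp); when threads in a group disagree on an `if`, the group steps through both paths one after the other, leaving the non-participating threads idle. The Python sketch below is a hypothetical simulation (`run_warp` is not a real API) that ignores finer hardware details, but it shows why branch-heavy code loses much of the GPU's advantage.

```python
def run_warp(values, cond, then_op, else_op):
    """Simulate one lock-step group of lanes executing an if/else.

    Returns (results, passes), where passes counts how many branch
    paths the whole group had to step through.
    """
    mask = [cond(v) for v in values]
    results = [None] * len(values)
    passes = 0
    if any(mask):  # at least one lane takes the 'then' path
        passes += 1
        for i, v in enumerate(values):
            if mask[i]:  # lanes whose mask is False sit idle this pass
                results[i] = then_op(v)
    if not all(mask):  # at least one lane takes the 'else' path
        passes += 1
        for i, v in enumerate(values):
            if not mask[i]:
                results[i] = else_op(v)
    return results, passes


def is_even(v):
    return v % 2 == 0


def halve(v):
    return v // 2


def triple_plus_one(v):
    return 3 * v + 1


uniform = list(range(0, 64, 2))  # 32 even values: all lanes agree
mixed = list(range(32))          # alternating even/odd: lanes disagree

print(run_warp(uniform, is_even, halve, triple_plus_one)[1])
print(run_warp(mixed, is_even, halve, triple_plus_one)[1])
```

The uniform group needs one pass, while the mixed group needs two, with half of its lanes idle during each. Code that branches unpredictably on every element pays this cost repeatedly, which is one reason such work often stays on the CPU.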

Examples & Analogies

Think of a CPU like a skilled chef who prepares one exquisite dish at a time, requiring a lot of attention to detail. Meanwhile, a GPGPU resembles a large catering team where many cooks simultaneously prepare multiple simple dishes, allowing for a large quantity of food to be prepared quickly for an event.

Applications in AI and Machine Learning

Chapter 4 of 4

Chapter Content

GPUs are commonly used for accelerating machine learning models, particularly deep learning, where tasks such as matrix multiplication and convolution operations can be parallelized.
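
Convolution parallelizes for a similar reason: each output value depends only on a small window of the input, so every output can be computed independently (on a GPU, typically one thread per output). The sketch below is a plain-Python 1D example with a hypothetical helper `conv_output`; like the convolution layers in common deep learning libraries it does not flip the kernel (strictly speaking, this is cross-correlation), and it uses "valid" mode with no padding.

```python
def conv_output(signal, kernel, i):
    """Compute output element i from the window signal[i : i + len(kernel)]."""
    return sum(signal[i + k] * kernel[k] for k in range(len(kernel)))


def conv1d(signal, kernel):
    n_out = len(signal) - len(kernel) + 1  # 'valid' mode: no padding
    # Each call to conv_output is independent of the others,
    # so on a GPU each index i could be handled by its own thread.
    return [conv_output(signal, kernel, i) for i in range(n_out)]


signal = [1, 2, 3, 4, 5, 6]
kernel = [1, 0, -1]  # a simple difference (edge-detecting) filter
result = conv1d(signal, kernel)
print(result)

# Computing the outputs in a different order gives the same answer,
# which is what makes the work safe to parallelize.
backwards = {i: conv_output(signal, kernel, i) for i in reversed(range(len(result)))}
assert [backwards[i] for i in range(len(result))] == result
```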

Detailed Explanation

In artificial intelligence and machine learning, and in deep learning especially, training a model requires an enormous number of arithmetic operations. GPUs are well suited to these tasks because they can perform the same calculation on many data points simultaneously. For instance, when training neural networks, operations such as matrix multiplication can be computed in parallel on the GPU, which leads to significantly faster training than on a CPU.
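
The matrix multiplication case can be sketched the same way: each output element C[i][j] is a dot product that reads the inputs but writes only its own cell, so all cells are independent. This Python sketch hands one cell to each task using the standard library's `ThreadPoolExecutor`; the helper names `matmul_cell` and `parallel_matmul` are hypothetical. CPython threads will not actually speed up pure-Python arithmetic (the global interpreter lock runs them one at a time), so this shows how the work decomposes, not GPU-level performance. A naive CUDA matmul kernel maps each (i, j) to one GPU thread in the same way; optimized libraries add tiling and other techniques on top.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def matmul_cell(A, B, i, j):
    # C[i][j] = dot product of row i of A with column j of B.
    # It only reads A and B, so every cell can be computed independently.
    return sum(A[i][k] * B[k][j] for k in range(len(B)))


def parallel_matmul(A, B, workers=4):
    rows, cols = len(A), len(B[0])
    cells = list(product(range(rows), range(cols)))  # one task per output cell
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as `cells`
        flat = list(pool.map(lambda ij: matmul_cell(A, B, *ij), cells))
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(parallel_matmul(A, B))
```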

Examples & Analogies

Imagine a sports team where every player practices at the same time with their own trainer, instead of waiting in line for a single coach. Using a GPU for machine learning works similarly: many workers handle different parts of the data simultaneously, so training finishes much sooner.

Key Concepts

- GPGPU: A GPU used for general-purpose computation.

- CUDA: NVIDIA's parallel computing platform and programming model for GPGPU development.

- Massive Parallelism: The ability to handle thousands of threads simultaneously.

- GPU vs. CPU: The fundamental differences between the two processing architectures.

Examples & Applications

Training deep learning models significantly faster using GPGPUs compared to CPUs.

Performing complex scientific simulations that require rapid processing of large datasets.

Memory Aids

Rhymes

GPGPU, it’s not just for scenes, it's for deep learning mean machines.

Stories

Imagine a busy restaurant where chefs (GPU cores) prepare multiple dishes (data points) simultaneously, making the food ready faster, just like how GPGPUs handle many tasks at once.

Memory Tools

GPGPU - Graphics Plus General Use Power!

Acronyms

GPGPU: General-purpose Graphics Processing Unit - a versatile device!

Glossary

- GPGPU

General-Purpose Graphics Processing Unit; a GPU designed to perform general computations beyond graphics rendering.

- CUDA

Compute Unified Device Architecture; a parallel computing platform and programming model created by NVIDIA.

- ROCm

ROCm (originally Radeon Open Compute); AMD's open software platform for GPU computing, offering a programming model similar to CUDA.

- Massive Parallelism

The capability of GPUs to handle many operations and threads simultaneously.

- Thread

A sequence of programmed instructions that can be managed independently by a scheduler.
