GPU Architecture


Interactive Audio Lesson

A student-teacher conversation explaining the topic in a relatable way.

Introduction to GPU Architecture


Today, we're going to learn about GPU architecture. Can anyone tell me what a GPU is?

Isn't it a Graphics Processing Unit used mainly for graphics and gaming?

That's right! GPUs are specialized processors designed to accelerate graphics rendering, but they also excel at parallel processing tasks. They contain hundreds or thousands of small processing cores.

How do these cores make GPUs better at certain tasks than CPUs?

Great question! While CPUs have a few powerful cores optimized for general-purpose processing, GPUs have many smaller cores that can process multiple tasks simultaneously. This architecture is especially effective for tasks like deep learning.

So, would that mean they’re just better overall?

Not necessarily better overall. GPUs are ideal for highly parallel tasks, while CPUs efficiently handle complex tasks that offer little parallelism. It's all about using the right tool for the task!

Can you give us an example of a task that GPUs perform really well?

Certainly! Think about training a neural network: it involves processing large amounts of data and performing many repetitive calculations. GPUs handle that workload exceptionally well.

To recap, GPUs consist of many small cores designed for parallel processing, which makes them suitable for high-performance computing tasks but not necessarily superior for every computing need.
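
To show why having many cores does not make a processor better at everything, here is a minimal sketch of Amdahl's law. This is a standard model that the lesson does not cover, and it describes how the serial part of a program limits the speedup you can get from adding parallel cores. The core counts and parallel fractions below are illustrative assumptions.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup predicted by Amdahl's law.

    parallel_fraction: share of the runtime that can be split across cores (0..1).
    cores: number of cores working on that parallel share.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative values: a 99%-parallel workload vs. one that is half serial,
    # on a small core count and on a GPU-like core count.
    for frac in (0.99, 0.50):
        for cores in (8, 4096):
            print(f"parallel={frac:.2f} cores={cores:5d} "
                  f"speedup={amdahl_speedup(frac, cores):7.2f}")
```

When half of the work is serial, even 4096 cores deliver less than a 2x speedup. This is why a workload needs a large parallel fraction before a GPU's many cores pay off.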

General-Purpose GPUs (GPGPU)


Now, let's talk about General-Purpose GPUs, or GPGPUs. What do you all think makes a GPU general-purpose?

Maybe because they can do more than just graphics?

Exactly! Platforms such as NVIDIA's CUDA and AMD's ROCm let modern GPUs perform tasks beyond rendering graphics, such as scientific simulations and artificial intelligence applications.

So does that mean programming these GPUs is different from programming CPUs?

Yes, programming GPUs typically requires different programming models, such as CUDA or OpenCL, which facilitate parallel programming to leverage the power of these processors effectively.

I heard that GPUs are very popular for machine learning—why is that?

Good question! Machine learning relies heavily on operations like the matrix multiplications in neural networks, which can be efficiently parallelized, so GPUs are a preferred choice for accelerating these computations.

In summary, GPGPUs expand the functionality of GPUs to general-purpose computing by using specialized programming models to manage parallel tasks effectively.

Comparison Between GPUs and CPUs


Let's compare GPUs and CPUs. Who can tell me the main difference in their architectures?

CPUs have fewer but more powerful cores, while GPUs have many more cores that are individually less powerful, right?

Yes! CPUs have higher clock speeds and are optimized for single-threaded tasks, while GPUs are designed for high parallelism with many cores executing the same instruction at once.

Does it mean that GPUs can’t perform tasks that require more complex computations?

Not at all! GPUs can tackle complex computations, but they do it by breaking tasks into smaller, simpler ones that can be executed simultaneously across many cores.

So when do we use CPUs instead of GPUs?

CPUs are better for tasks that involve lots of branching and decision-making, need a quick response to a single request, or mix many different kinds of instructions. That’s why both CPUs and GPUs are essential in a computer system: they complement each other.

To summarize, CPUs focus on a few complex tasks at a time, while GPUs excel at a large number of simpler parallel tasks, making them ideal for specific applications.
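
The teacher's point that GPUs handle a complex computation by splitting it into many simpler steps can be illustrated with a parallel tree reduction. The sketch below is a sequential Python simulation, not real GPU code. It sums a list by combining neighbouring pairs in rounds. Within each round every pair is independent of the others, so on a GPU each pair could be handled by its own thread.

```python
def tree_reduce_sum(values: list[float]) -> tuple[float, int]:
    """Sum `values` by pairwise combining; return (total, number_of_rounds).

    Each round roughly halves the number of partial sums. All additions
    inside one round are independent, which is what makes the pattern
    suitable for parallel hardware.
    """
    if not values:
        return 0.0, 0
    level = list(values)
    rounds = 0
    while len(level) > 1:
        # One "parallel step": add neighbouring pairs; an odd leftover passes through.
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])
        level = nxt
        rounds += 1
    return level[0], rounds


if __name__ == "__main__":
    data = [float(i) for i in range(1, 1025)]  # 1, 2, ..., 1024
    total, rounds = tree_reduce_sum(data)
    print(total, rounds)  # 524800.0 10
```

A plain sequential loop needs a chain of 1023 dependent additions for 1024 numbers. The tree version needs only 10 rounds, provided enough cores are available to perform each round's additions at the same time.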

Introduction & Overview


Standard

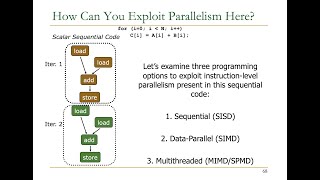

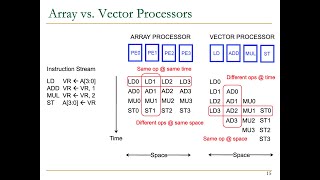

The GPU architecture consists of numerous small processing cores optimized for executing instructions in parallel under a SIMD model, making them suitable for graphics rendering, scientific simulations, and machine learning. This section examines the functional similarities and differences between GPUs and CPUs, highlighting the advantages of GPUs in handling large-scale data operations.

Detailed

GPU Architecture

GPUs, or Graphics Processing Units, are specialized hardware that plays a critical role in high-performance computing. With hundreds or thousands of small processing cores, they excel at applying the same instruction to many data elements at once, following a Single Instruction, Multiple Data (SIMD) model. In this section, we explore how this architecture lets GPUs handle demanding workloads such as graphics rendering, scientific simulations, and machine learning.

Key Components of GPU Architecture

- Processing Cores: Unlike CPUs, which are designed for single-threaded performance, GPUs operate with massive parallelism in mind, capable of handling thousands of threads concurrently. This makes them exceptionally efficient for processing large datasets where operations can be performed simultaneously.

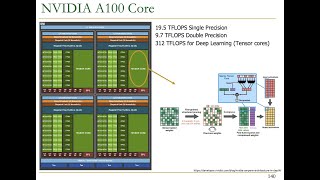

- General-Purpose GPUs (GPGPU): Modern GPUs have evolved to become general-purpose processors. Technologies like NVIDIA's CUDA and AMD's ROCm allow GPUs to execute parallel workloads for a variety of applications beyond just graphics tasks, such as deep learning and artificial intelligence.

- Comparative Performance: Understanding how GPUs differ from CPUs in terms of architecture and capabilities is crucial. CPUs excel at handling diverse tasks with lower latency, while GPUs shine in scenarios requiring simultaneous execution of similar tasks over vast datasets—ideal for vector and matrix computations relevant in deep learning applications.
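
The first bullet's claim that a GPU runs thousands of threads over a much larger dataset is often implemented with a "grid-stride loop," a common CUDA idiom. The sketch below simulates this idea in plain Python. A fixed number of threads (`num_threads`) each start at their own index and then step forward by the total thread count, so together they cover every element exactly once. The function names and sizes are hypothetical and chosen only for illustration.

```python
def grid_stride_indices(thread_id: int, num_threads: int, n: int) -> list[int]:
    """Element indices handled by one thread in a grid-stride loop over n elements."""
    return list(range(thread_id, n, num_threads))


def scale_all(data: list[float], factor: float, num_threads: int) -> list[float]:
    """Multiply every element by `factor`, splitting the work grid-stride style.

    The simulated threads run one after another here; on a GPU they would
    run concurrently, which is safe because no two threads touch the same index.
    """
    out = [0.0] * len(data)
    for tid in range(num_threads):
        for i in grid_stride_indices(tid, num_threads, len(data)):
            out[i] = data[i] * factor
    return out


if __name__ == "__main__":
    n, threads = 10, 4
    for tid in range(threads):
        print(tid, grid_stride_indices(tid, threads, n))
    # 0 [0, 4, 8]
    # 1 [1, 5, 9]
    # 2 [2, 6]
    # 3 [3, 7]
    print(scale_all([1.0, 2.0, 3.0], 2.0, num_threads=2))  # [2.0, 4.0, 6.0]
```

Because the stride equals the thread count, the same code works whether the dataset is smaller or vastly larger than the number of threads.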

In summary, GPU architecture trades the sophistication of individual cores for massive parallel throughput, which is why GPUs have become central to graphics, scientific computing, and machine learning.


Audio Book


Overview of GPU Architecture

Chapter 1 of 5


Chapter Content

GPUs are specialized hardware accelerators designed to handle large-scale parallel computations, making them ideal for tasks such as graphics rendering, scientific simulations, and machine learning.

Detailed Explanation

GPUs, or Graphics Processing Units, are specially designed pieces of hardware that excel at performing many calculations simultaneously. This ability makes them well suited to demanding workloads such as rendering graphics in video games or running large scientific simulations. They are built to apply operations efficiently across large datasets, which distinguishes them from traditional general-purpose processors.

Examples & Analogies

Think of a GPU as a busy restaurant kitchen. Just like a kitchen has many chefs working in parallel to prepare different meals at the same time, a GPU has many smaller processing cores that work together to process multiple data tasks simultaneously.

Parallel Processing Cores

Chapter 2 of 5


Chapter Content

GPU architecture consists of hundreds or thousands of small processing cores, each capable of executing instructions in parallel. These cores are optimized for SIMD-style operations, enabling them to process large datasets efficiently.

Detailed Explanation

A key feature of GPU architecture is its numerous small processors or cores. These cores can execute instructions at the same time, which is known as parallel processing. This architecture is particularly optimized for SIMD (Single Instruction, Multiple Data) operations, meaning the same instruction can be applied to many data points concurrently. This allows for quick calculations and efficient data handling, especially in tasks involving complex graphics and calculations.
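
To make the SIMD idea concrete, the following sketch simulates a hypothetical SIMD unit with a width of `LANES = 8` in plain Python. Each step issues one instruction, a multiply-add `a * x + y`, that is applied to all eight lanes together. The input is processed in lane-sized chunks. Real hardware widths and instruction sets vary, so the width and helper names here are assumptions made for illustration.

```python
from typing import Callable

LANES = 8  # hypothetical SIMD width; real processors use different widths


def simd_step(op: Callable[[float, float], float],
              xs: list[float], ys: list[float]) -> list[float]:
    """Apply ONE instruction `op` to every lane in the same step."""
    assert len(xs) == len(ys) <= LANES
    return [op(x, y) for x, y in zip(xs, ys)]


def saxpy_simd(a: float, x: list[float], y: list[float]) -> tuple[list[float], int]:
    """Compute a*x + y element-wise, LANES elements per instruction.

    Returns the result and the number of SIMD instructions issued.
    """
    out: list[float] = []
    steps = 0
    for start in range(0, len(x), LANES):
        chunk_x = x[start:start + LANES]
        chunk_y = y[start:start + LANES]
        out.extend(simd_step(lambda xi, yi: a * xi + yi, chunk_x, chunk_y))
        steps += 1
    return out, steps


if __name__ == "__main__":
    x = [float(i) for i in range(20)]
    y = [1.0] * 20
    result, steps = saxpy_simd(2.0, x, y)
    print(result[:4], steps)  # [1.0, 3.0, 5.0, 7.0] 3
```

Twenty elements take 3 SIMD instructions instead of 20 scalar ones. The last chunk uses only 4 of the 8 lanes, which shows why SIMD pays off most on large, regular datasets.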

Examples & Analogies

Imagine watching a team of assembly line workers. Each worker (core) does a specific part of the task, such as painting parts of a car. While one person paints the door, another person works on the hood, and so on. This division of labor allows the entire car to be completed much faster than if a single person had to do all these tasks sequentially.

General-Purpose GPUs (GPGPUs)

Chapter 3 of 5


Chapter Content

Modern GPUs, programmed through platforms such as NVIDIA's CUDA and AMD's ROCm, have evolved into powerful general-purpose processors capable of running parallel workloads for a variety of applications beyond graphics, including deep learning, artificial intelligence, and scientific simulations.

Detailed Explanation

Modern GPUs are not limited to just graphics processing; they have evolved into General-Purpose GPUs (GPGPUs), which can perform a wide range of computational tasks. Technologies like NVIDIA's CUDA allow developers to use GPU power for machine learning, data analysis, and more. This versatility highlights the strength of GPUs in handling a variety of parallel workloads, making them an essential tool beyond traditional graphics applications.
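
For readers curious about how CUDA-style programs map work onto parallel cores, here is a plain-Python simulation of the launch model. It is not the CUDA API itself. In CUDA, each thread computes its global index as `blockIdx.x * blockDim.x + threadIdx.x`. The host launches enough blocks to cover the data, and each thread checks that its index is within range. The functions `vector_add_kernel` and `launch` are hypothetical stand-ins.

```python
from typing import Callable


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: list[float], b: list[float], out: list[float]) -> None:
    """Body run by every simulated thread (mirrors a CUDA __global__ function)."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):                        # guard: the last block may overhang the data
        out[i] = a[i] + b[i]


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Hypothetical launcher: run `kernel` once per (block, thread) pair.

    On a GPU these invocations run in parallel; here they run one after another.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


if __name__ == "__main__":
    n = 1000
    a = [1.0] * n
    b = [float(i) for i in range(n)]
    out = [0.0] * n
    threads_per_block = 256
    blocks = (n + threads_per_block - 1) // threads_per_block  # ceiling division
    launch(vector_add_kernel, blocks, threads_per_block, a, b, out)
    print(blocks, out[:3], out[-1])  # 4 [1.0, 2.0, 3.0] 1000.0
```

Four blocks of 256 threads give 1024 threads for 1000 elements. The bounds check makes the 24 extra threads do nothing instead of writing past the end of the array.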

Examples & Analogies

Consider a Swiss Army knife, which is designed to be multifunctional. Just as this tool can cut, turn screws, and open bottles, a GPGPU can apply its computing power to many applications, like running simulations or training AI models.

GPU vs. CPU

Chapter 4 of 5


Chapter Content

CPUs are designed for single-threaded performance and general-purpose computation, whereas GPUs are designed for parallelism and can execute thousands of threads simultaneously.

Detailed Explanation

CPUs, or Central Processing Units, are the main processors in computers. They are designed to execute a relatively small number of instruction streams as quickly as possible, so each individual task finishes with low latency. GPUs, in contrast, are optimized to run many tasks concurrently, which lets them work through vast parallel workloads. This makes them particularly suitable for tasks that process large amounts of data simultaneously, such as video rendering or large numerical calculations.
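
A deliberately simplified cost model can illustrate the trade-off between latency and throughput. The sketch assumes a hypothetical CPU with 8 fast cores (1 ns per operation) and a hypothetical GPU with 4096 slower cores (4 ns per operation). It ignores memory transfers, scheduling, and launch overhead, all of which matter on real hardware. The numbers are illustrative and are not measurements of any real chip.

```python
import math
from dataclasses import dataclass


@dataclass
class Processor:
    name: str
    cores: int          # independent operations that can run at the same time
    ns_per_op: float    # time for one core to finish one operation (assumed)


def time_ns(p: Processor, independent_ops: int) -> float:
    """Time to finish `independent_ops` operations, processed in waves of `p.cores`."""
    waves = math.ceil(independent_ops / p.cores)
    return waves * p.ns_per_op


if __name__ == "__main__":
    cpu = Processor("CPU (hypothetical)", cores=8, ns_per_op=1.0)
    gpu = Processor("GPU (hypothetical)", cores=4096, ns_per_op=4.0)
    for ops in (1, 1_000_000):
        print(f"{ops:>9} ops: CPU {time_ns(cpu, ops):>9.0f} ns, "
              f"GPU {time_ns(gpu, ops):>7.0f} ns")
```

For a single operation, the CPU finishes first (1 ns vs. 4 ns). For a million independent operations, the GPU needs 245 waves (980 ns), while the CPU needs 125,000 waves (125,000 ns).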

Examples & Analogies

Think of a CPU as a skilled writer who can produce a quality novel page by page—thorough but sequential. In contrast, a GPU is like a film crew that can shoot multiple scenes at the same time, leading to a faster completion of a project than if one person were to do it all alone.

Massive Parallelism in GPUs

Chapter 5 of 5


Chapter Content

Massive Parallelism: GPUs can handle highly parallel tasks that involve simple operations on large amounts of data, making them ideal for vector and matrix computations in deep learning and graphics rendering.

Detailed Explanation

Massive parallelism refers to the GPU’s ability to handle enormous tasks that can be broken down into smaller parts executed simultaneously. This capability is particularly valuable in deep learning, where operations like matrix multiplications become significantly faster when executed in parallel due to the GPU's architectural design. It allows for processing vast datasets efficiently, which is crucial in fields such as artificial intelligence.
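
Matrix multiplication parallelizes well because each output element `C[i][j]` is an independent dot product of row `i` of `A` and column `j` of `B`. The sketch below makes this independence explicit by submitting one task per output element to Python's `concurrent.futures.ThreadPoolExecutor`. It only models the structure of the work. In CPython, threads will not produce a real speedup for pure-Python arithmetic, whereas a GPU would assign such elements to thousands of hardware threads. The helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]


def dot_row_col(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """One independent unit of work: C[i][j] = sum over k of A[i][k] * B[k][j]."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_parallel(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Multiply a (n x m) by b (m x p), computing every output element as a separate task."""
    if not a or not b or len(a[0]) != len(b):
        raise ValueError("incompatible shapes")
    n, p = len(a), len(b[0])
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {(i, j): pool.submit(dot_row_col, a, b, i, j)
                   for i in range(n) for j in range(p)}
        # No task depends on another, so they may finish in any order.
        return [[futures[(i, j)].result() for j in range(p)] for i in range(n)]


if __name__ == "__main__":
    A = [[1.0, 2.0], [3.0, 4.0]]
    B = [[5.0, 6.0], [7.0, 8.0]]
    print(matmul_parallel(A, B))  # [[19.0, 22.0], [43.0, 50.0]]
```

An n x p result contains n * p independent tasks. For the large matrices used in deep learning, this provides enough parallel work to keep thousands of GPU cores busy.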

Examples & Analogies

Imagine a massive construction project. Instead of one person building a house all by themselves, a large crew shows up: carpenters, electricians, and plumbers working on different parts of the house at the same time. This coordination allows for the project to be completed much faster than if it were built sequentially.

Key Concepts

- GPU: A specialized processor for accelerating graphics and parallel computing tasks.

- GPGPU: Utilizing GPUs for general-purpose computing beyond just graphics rendering.

- SIMD: A processing architecture allowing a single instruction to work on multiple data elements simultaneously.

- CUDA: A parallel computing platform by NVIDIA for leveraging GPU capabilities in general computing.

Examples & Applications

GPU usage in rendering complex graphics in video games.

Using GPGPU for training deep learning models in artificial intelligence.

Memory Aids


Rhymes

GPUs go fast, with many cores in a blast!

Stories

Imagine a head chef (GPU) who has many assistants (cores). While a lone chef (CPU) carefully prepares one gourmet meal at a time, the chef with assistants finishes many dishes quickly, showing that teamwork (parallel processing) boosts productivity!

Memory Tools

To remember GPU functions: 'Gargantuan Pairs Unveil power'.

Acronyms

GPU - 'Grocery Pick Unit'

Picking up data from shelves (memory) and delivering it swiftly!


Glossary

- GPU

Graphics Processing Unit, a specialized processor designed to accelerate rendering of graphics.

- GPGPU

General-Purpose Graphics Processing Unit, allowing GPUs to perform computing tasks beyond graphics rendering.

- SIMD

Single Instruction, Multiple Data, a parallel computing model where a single instruction performs the same operation on multiple data.

- CUDA

Compute Unified Device Architecture, a parallel computing platform and programming model created by NVIDIA.

- Core

A single processing unit within the GPU that can execute instructions.
